Deep Learning Resources

ImageNet

Single-model on 224×224

| Method | top1 | top5 | Model Size | Speed |
|---|---|---|---|---|
| ResNet-101 | 78.0% | 94.0% | | |
| ResNet-200 | 78.3% | 94.2% | | |
| Inception-v3 | | | | |
| Inception-v4 | | | | |
| Inception-ResNet-v2 | | | | |
| ResNeXt-50 | 77.8% | | | |
| ResNeXt-101 | 79.6% | 94.7% | | |

Single-model on 320×320 / 299×299

| Method | top1 | top5 | Model Size | Speed |
|---|---|---|---|---|
| ResNet-101 | | | | |
| ResNet-200 | 79.9% | 95.2% | | |
| Inception-v3 | 78.8% | 94.4% | | |
| Inception-v4 | 80.0% | 95.0% | | |
| Inception-ResNet-v2 | 80.1% | 95.1% | | |
| ResNeXt-50 | | | | |
| ResNeXt-101 | 80.9% | 95.6% | | |
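The top1 and top5 columns give the fraction of validation images whose true class is the highest-scoring prediction, or among the five highest-scoring ones. A minimal plain-Python sketch of the metric, assuming one list of class scores per image and integer labels (`top_k_accuracy` is a hypothetical helper, not taken from any of the linked codebases):

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    if len(scores) != len(labels) or not labels:
        raise ValueError("need the same, non-zero number of score rows and labels")
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score; keep the first k.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


if __name__ == "__main__":
    scores = [
        [0.1, 0.7, 0.2],
        [0.5, 0.3, 0.2],
        [0.2, 0.3, 0.5],
    ]
    labels = [1, 1, 0]
    print("top-1:", top_k_accuracy(scores, labels, k=1))
    print("top-2:", top_k_accuracy(scores, labels, k=2))
```

Papers often quote error rates instead; top-k error is simply 1 minus this accuracy.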

AlexNet

ImageNet Classification with Deep Convolutional Neural Networks

- nips-page: http://papers.nips.cc/paper/4824-imagenet-classification-with-deep-

- paper: http://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf

- slides: http://www.image-net.org/challenges/LSVRC/2012/supervision.pdf

- code: https://code.google.com/p/cuda-convnet/

- github: https://github.com/dnouri/cuda-convnet

- code: https://code.google.com/p/cuda-convnet2/

Network In Network

Network In Network

- intro: ICLR 2014

- arxiv: http://arxiv.org/abs/1312.4400

- gitxiv: http://gitxiv.com/posts/PA98qGuMhsijsJzgX/network-in-network-nin

- code(Caffe, official): https://gist.github.com/mavenlin/d802a5849de39225bcc6

Batch-normalized Maxout Network in Network

GoogLeNet (Inception V1)

Going Deeper with Convolutions

- arxiv: http://arxiv.org/abs/1409.4842

- github: https://github.com/google/inception

- github: https://github.com/soumith/inception.torch

Building a deeper understanding of images

VGGNet

Very Deep Convolutional Networks for Large-Scale Image Recognition

- homepage: http://www.robots.ox.ac.uk/~vgg/research/very_deep/

- arxiv: http://arxiv.org/abs/1409.1556

- slides: http://llcao.net/cu-deeplearning15/presentation/cc3580_Simonyan.pptx

- slides: http://www.robots.ox.ac.uk/~karen/pdf/ILSVRC_2014.pdf

- slides: http://deeplearning.cs.cmu.edu/slides.2015/25.simonyan.pdf

- github(official, deprecated Caffe API): https://gist.github.com/ksimonyan/211839e770f7b538e2d8

- github: https://github.com/ruimashita/caffe-train

Tensorflow VGG16 and VGG19

Inception-V2

Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift

- intro: ImageNet top-5 error: 4.82%

- keywords: internal covariate shift problem

- arxiv: http://arxiv.org/abs/1502.03167

- blog: https://standardfrancis.wordpress.com/2015/04/16/batch-normalization/

- notes: http://blog.csdn.net/happynear/article/details/44238541

- github: https://github.com/lim0606/caffe-googlenet-bn
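Batch normalization standardizes each activation over the mini-batch, then applies a learned scale γ and shift β: y = γ(x − μ_B)/√(σ_B² + ε) + β. A minimal training-mode sketch for a single feature in plain Python; the running averages used at inference time are omitted, and the function name and defaults are illustrative:

```python
import math
from typing import List, Sequence


def batch_norm(x: Sequence[float], gamma: float = 1.0, beta: float = 0.0,
               eps: float = 1e-5) -> List[float]:
    """Training-mode batch normalization of one feature across a mini-batch."""
    n = len(x)
    mean = sum(x) / n
    # Mini-batch (biased) variance, averaged over the n samples.
    var = sum((v - mean) ** 2 for v in x) / n
    inv_std = 1.0 / math.sqrt(var + eps)
    return [gamma * (v - mean) * inv_std + beta for v in x]


if __name__ == "__main__":
    print([round(v, 3) for v in batch_norm([1.0, 2.0, 3.0, 4.0])])
```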

ImageNet pre-trained models with batch normalization

- arxiv: https://arxiv.org/abs/1612.01452

- project page: http://www.inf-cv.uni-jena.de/Research/CNN+Models.html

- github: https://github.com/cvjena/cnn-models

Inception-V3

Inception-V3 = Inception-V2 + BN-auxiliary (fully connected layer of the auxiliary classifier is also batch-normalized, not just the convolutions)

Rethinking the Inception Architecture for Computer Vision

- intro: 21.2% top-1 and 5.6% top-5 error for single-frame evaluation of a single network; 17.3% top-1 and 3.5% top-5 error with an ensemble of 4 models and multi-crop evaluation

- arxiv: http://arxiv.org/abs/1512.00567

- github: https://github.com/Moodstocks/inception-v3.torch

Inception in TensorFlow

- intro: demonstrates how to train the Inception v3 architecture

- github: https://github.com/tensorflow/models/tree/master/inception

Train your own image classifier with Inception in TensorFlow

- intro: Inception-v3

- blog: https://research.googleblog.com/2016/03/train-your-own-image-classifier-with.html

Notes on the TensorFlow Implementation of Inception v3

Training an InceptionV3-based image classifier with your own dataset

Inception-BN full for Caffe: Inception-BN ImageNet (21K classes) model for Caffe

ResNet

Deep Residual Learning for Image Recognition

- intro: CVPR 2016 Best Paper Award

- arxiv: http://arxiv.org/abs/1512.03385

- slides: http://research.microsoft.com/en-us/um/people/kahe/ilsvrc15/ilsvrc2015_deep_residual_learning_kaiminghe.pdf

- gitxiv: http://gitxiv.com/posts/LgPRdTY3cwPBiMKbm/deep-residual-learning-for-image-recognition

- github: https://github.com/KaimingHe/deep-residual-networks

- github: https://github.com/ry/tensorflow-resnet
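The central idea of residual learning is that a block learns a residual F(x) and outputs F(x) + x through an identity shortcut, so representing an identity mapping only requires F to be zero. A minimal sketch, assuming fully connected layers in place of convolutions and omitting batch normalization (all names are illustrative):

```python
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def matvec(w: Matrix, x: Sequence[float]) -> List[float]:
    """Dense layer without bias: w @ x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def relu(x: Sequence[float]) -> List[float]:
    return [max(0.0, v) for v in x]


def residual_block(x: Sequence[float], w1: Matrix, w2: Matrix) -> List[float]:
    """y = relu(F(x) + x) with F(x) = W2 relu(W1 x); shapes must match for the identity shortcut."""
    f = matvec(w2, relu(matvec(w1, x)))
    return relu([fi + xi for fi, xi in zip(f, x)])


if __name__ == "__main__":
    zero = [[0.0, 0.0], [0.0, 0.0]]
    # With all-zero weights the block passes a non-negative input through unchanged.
    print(residual_block([1.0, 2.0], zero, zero))
```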

Third-party re-implementations

- github: https://github.com/KaimingHe/deep-residual-networks#third-party-re-implementations

Training and investigating Residual Nets

- intro: Facebook AI Research

- blog: http://torch.ch/blog/2016/02/04/resnets.html

- github: https://github.com/facebook/fb.resnet.torch

resnet.torch: an updated version of fb.resnet.torch with many changes.

Highway Networks and Deep Residual Networks

Interpreting Deep Residual Learning Blocks as Locally Recurrent Connections

Lab41 Reading Group: Deep Residual Learning for Image Recognition

50-layer ResNet, trained on ImageNet, classifying webcam

- homepage: https://ml4a.github.io/demos/keras.js/

Reproduced ResNet on CIFAR-10 and CIFAR-100 dataset.

ResNet-V2

Identity Mappings in Deep Residual Networks

- intro: ECCV 2016. ResNet-v2

- arxiv: http://arxiv.org/abs/1603.05027

- github: https://github.com/KaimingHe/resnet-1k-layers

- github: https://github.com/tornadomeet/ResNet

Deep Residual Networks for Image Classification with Python + NumPy

Inception-V4 / Inception-ResNet-V2

Inception-V4, Inception-Resnet And The Impact Of Residual Connections On Learning

- intro: Workshop track, ICLR 2016. 3.08% top-5 error on the test set of the ImageNet classification (CLS) challenge

- arxiv: http://arxiv.org/abs/1602.07261

- github(Keras): https://github.com/kentsommer/keras-inceptionV4

The inception-resnet-v2 models trained from scratch via torch

Inception v4 in Keras

- intro: Inception-v4, Inception-ResNet-v1 and Inception-ResNet-v2

- github: https://github.com/titu1994/Inception-v4

ResNeXt

Aggregated Residual Transformations for Deep Neural Networks

- intro: CVPR 2017. UC San Diego & Facebook AI Research

- arxiv: https://arxiv.org/abs/1611.05431

- github(Torch): https://github.com/facebookresearch/ResNeXt

- github: https://github.com/dmlc/mxnet/blob/master/example/image-classification/symbol/resnext.py

- models: http://data.dmlc.ml/models/imagenet/resnext/

- reddit: https://www.reddit.com/r/MachineLearning/comments/5haml9/p_implementation_of_aggregated_residual/
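ResNeXt splits the bottleneck into C parallel paths of width d (C is the "cardinality"), aggregated by summation and usually implemented as a grouped 3×3 convolution. Counting weights only (no biases or BN), a sketch of why the 32×4d template costs about as much as the ResNet 256-64-64-256 bottleneck; the helper names are illustrative:

```python
def resnet_bottleneck_params(c_in: int, width: int) -> int:
    """1x1 reduce (c_in -> width), 3x3 (width -> width), 1x1 expand (width -> c_in)."""
    return c_in * width + 3 * 3 * width * width + width * c_in


def resnext_block_params(c_in: int, cardinality: int, d: int) -> int:
    """C parallel paths, each 1x1 (c_in -> d), 3x3 (d -> d), 1x1 (d -> c_in)."""
    return cardinality * (c_in * d + 3 * 3 * d * d + d * c_in)


if __name__ == "__main__":
    print("ResNet bottleneck:", resnet_bottleneck_params(256, 64))
    print("ResNeXt 32x4d:    ", resnext_block_params(256, 32, 4))
```

Both come to roughly 70k weights (69,632 and 70,144), and cardinality 1 with d = 64 reduces to the plain bottleneck.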

Residual Networks Variants

Resnet in Resnet: Generalizing Residual Architectures

- paper: http://beta.openreview.net/forum?id=lx9l4r36gU2OVPy8Cv9g

- arxiv: http://arxiv.org/abs/1603.08029

Residual Networks are Exponential Ensembles of Relatively Shallow Networks

Wide Residual Networks

- intro: BMVC 2016

- arxiv: http://arxiv.org/abs/1605.07146

- github: https://github.com/szagoruyko/wide-residual-networks

- github: https://github.com/asmith26/wide_resnets_keras

- github: https://github.com/ritchieng/wideresnet-tensorlayer

- github: https://github.com/xternalz/WideResNet-pytorch

- github(Torch): https://github.com/meliketoy/wide-residual-network

Residual Networks of Residual Networks: Multilevel Residual Networks

Multi-Residual Networks

Deep Pyramidal Residual Networks

- intro: PyramidNet

- arxiv: https://arxiv.org/abs/1610.02915

- github: https://github.com/jhkim89/PyramidNet

Learning Identity Mappings with Residual Gates

Wider or Deeper: Revisiting the ResNet Model for Visual Recognition

- intro: image classification, semantic image segmentation

- arxiv: https://arxiv.org/abs/1611.10080

- github: https://github.com/itijyou/ademxapp

Deep Pyramidal Residual Networks with Separated Stochastic Depth

Spatially Adaptive Computation Time for Residual Networks

- intro: Higher School of Economics & Google & CMU

- arxiv: https://arxiv.org/abs/1612.02297

ShaResNet: reducing residual network parameter number by sharing weights

Sharing Residual Units Through Collective Tensor Factorization in Deep Neural Networks

- intro: Collective Residual Networks

- arxiv: https://arxiv.org/abs/1703.02180

- github(MXNet): https://github.com/cypw/CRU-Net

Residual Attention Network for Image Classification

- intro: CVPR 2017 Spotlight. SenseTime Group Limited & Tsinghua University & The Chinese University of Hong Kong

- intro: ImageNet (4.8% single model and single crop, top-5 error)

- arxiv: https://arxiv.org/abs/1704.06904

- github(Caffe): https://github.com/buptwangfei/residual-attention-network

Dilated Residual Networks

- intro: CVPR 2017. Princeton University & Intel Labs

- keywords: Dilated Residual Networks (DRN)

- project page: http://vladlen.info/publications/dilated-residual-networks/

- arxiv: https://arxiv.org/abs/1705.09914

- paper: http://vladlen.info/papers/DRN.pdf

Dynamic Steerable Blocks in Deep Residual Networks

- intro: University of Amsterdam & ESAT-PSI

- arxiv: https://arxiv.org/abs/1706.00598

Learning Deep ResNet Blocks Sequentially using Boosting Theory

- intro: Microsoft Research & Princeton University

- arxiv: https://arxiv.org/abs/1706.04964

Learning Strict Identity Mappings in Deep Residual Networks

- keywords: epsilon-ResNet

- arxiv: https://arxiv.org/abs/1804.01661

Spiking Deep Residual Network

- arxiv: https://arxiv.org/abs/1805.01352

DenseNet

Densely Connected Convolutional Networks

- intro: CVPR 2017 best paper. Cornell University & Tsinghua University. DenseNet

- arxiv: http://arxiv.org/abs/1608.06993

- github: https://github.com/liuzhuang13/DenseNet

- github(Lasagne): https://github.com/Lasagne/Recipes/tree/master/papers/densenet

- github(Keras): https://github.com/tdeboissiere/DeepLearningImplementations/tree/master/DenseNet

- github(Caffe): https://github.com/liuzhuang13/DenseNetCaffe

- github(Tensorflow): https://github.com/YixuanLi/densenet-tensorflow

- github(Keras): https://github.com/titu1994/DenseNet

- github(PyTorch): https://github.com/bamos/densenet.pytorch

- github(PyTorch): https://github.com/andreasveit/densenet-pytorch

- github(Tensorflow): https://github.com/ikhlestov/vision_networks
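In a dense block every layer receives the channel-wise concatenation of the block input and all preceding layers' outputs, so with k0 input channels and growth rate k, layer ℓ sees k0 + k(ℓ − 1) channels. A plain-Python sketch with a feature map stored as a list of channels; `mean_layer` is a hypothetical toy stand-in for the BN-ReLU-conv composite function:

```python
from typing import Callable, List, Sequence

FeatureMap = List[List[float]]  # list of channels, each a flattened spatial map
Layer = Callable[[FeatureMap], FeatureMap]


def dense_block(x: FeatureMap, layers: Sequence[Layer]) -> FeatureMap:
    """Each layer sees the concatenation of the block input and all earlier outputs."""
    features = list(x)
    for layer in layers:
        features = features + layer(features)  # channel-wise concatenation
    return features


def input_channels_per_layer(k0: int, growth_rate: int, num_layers: int) -> List[int]:
    """Layer number idx (1-based) receives k0 + growth_rate * (idx - 1) channels."""
    return [k0 + growth_rate * (idx - 1) for idx in range(1, num_layers + 1)]


def mean_layer(features: FeatureMap) -> FeatureMap:
    """Toy layer with growth rate 1: per-position mean over all input channels."""
    n = len(features)
    return [[sum(ch[i] for ch in features) / n for i in range(len(features[0]))]]


if __name__ == "__main__":
    out = dense_block([[1.0, 2.0], [3.0, 4.0]], [mean_layer, mean_layer])
    print(len(out), "channels after two layers")
    print(input_channels_per_layer(64, 32, 6))
```

For example, a 6-layer block with k0 = 64 and k = 32 (the first block of DenseNet-121) ends with 64 + 6·32 = 256 channels.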

Memory-Efficient Implementation of DenseNets

- intro: Cornell University & Fudan University & Facebook AI Research

- arxiv: https://arxiv.org/abs/1707.06990

- github: https://github.com/liuzhuang13/DenseNet/tree/master/models

- github: https://github.com/gpleiss/efficient_densenet_pytorch

- github: https://github.com/taineleau/efficient_densenet_mxnet

- github: https://github.com/Tongcheng/DN_CaffeScript

DenseNet 2.0

CondenseNet: An Efficient DenseNet using Learned Group Convolutions

Xception

Deep Learning with Separable Convolutions

Xception: Deep Learning with Depthwise Separable Convolutions

- intro: CVPR 2017. Extreme Inception

- arxiv: https://arxiv.org/abs/1610.02357

- code: https://keras.io/applications/#xception

- github(Keras): https://github.com/fchollet/deep-learning-models/blob/master/xception.py

- github: https://gist.github.com/culurciello/554c8e56d3bbaf7c66bf66c6089dc221

- github: https://github.com/kwotsin/Tensorflow-Xception

- github: https://github.com/bruinxiong/xception.mxnet

- notes: http://www.shortscience.org/paper?bibtexKey=journals%2Fcorr%2F1610.02357
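A depthwise separable convolution factors a k×k convolution into a per-channel (depthwise) k×k convolution plus a 1×1 (pointwise) convolution that mixes channels; the same factorization underlies MobileNets. Counting weights with biases ignored shows the saving; the layer sizes in the example are arbitrary:

```python
def standard_conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights of a kernel x kernel convolution from c_in to c_out channels (no bias)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel: int, c_in: int, c_out: int) -> int:
    """Depthwise kernel x kernel conv (one filter per input channel) plus a 1x1 pointwise conv."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


if __name__ == "__main__":
    print(standard_conv_params(3, 128, 256), depthwise_separable_params(3, 128, 256))
```

For a 3×3 layer mapping 128 to 256 channels that is 294,912 weights versus 33,920, roughly 8.7× fewer. The count is the same whether the pointwise step comes before or after the depthwise step.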

Towards a New Interpretation of Separable Convolutions

MobileNets

MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications

- intro: Google

- arxiv: https://arxiv.org/abs/1704.04861

- github: https://github.com/rcmalli/keras-mobilenet

- github: https://github.com/marvis/pytorch-mobilenet

- github(Tensorflow): https://github.com/Zehaos/MobileNet

- github: https://github.com/shicai/MobileNet-Caffe

- github: https://github.com/hollance/MobileNet-CoreML

- github: https://github.com/KeyKy/mobilenet-mxnet

MobileNets: Open-Source Models for Efficient On-Device Vision

- blog: https://research.googleblog.com/2017/06/mobilenets-open-source-models-for.html

- github: https://github.com/tensorflow/models/blob/master/slim/nets/mobilenet_v1.md

Google’s MobileNets on the iPhone

- blog: http://machinethink.net/blog/googles-mobile-net-architecture-on-iphone/

- github: https://github.com/hollance/MobileNet-CoreML

ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices

- intro: Megvii Inc (Face++)

- arxiv: https://arxiv.org/abs/1707.01083
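ShuffleNet's channel shuffle lets information flow between the groups of consecutive group convolutions: reshape the C channels into (g, C/g), transpose, and flatten. A sketch on a plain list of channel indices; a real implementation applies the same reshape and transpose to the tensor's channel axis:

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def channel_shuffle(channels: Sequence[T], groups: int) -> List[T]:
    """Reshape channels to (groups, n), transpose to (n, groups), and flatten."""
    if groups <= 0 or len(channels) % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    n = len(channels) // groups
    # Output position (i, g) takes the i-th channel of group g.
    return [channels[g * n + i] for i in range(n) for g in range(groups)]


if __name__ == "__main__":
    print(channel_shuffle(list(range(6)), groups=2))
```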

Depth_conv-for-mobileNet

- github: https://github.com/LamHoCN/Depth_conv-for-mobileNet

The Enhanced Hybrid MobileNet

- arxiv: https://arxiv.org/abs/1712.04698

FD-MobileNet: Improved MobileNet with a Fast Downsampling Strategy

- arxiv: https://arxiv.org/abs/1802.03750

A Quantization-Friendly Separable Convolution for MobileNets

- intro: The 1st Workshop on Energy Efficient Machine Learning and Cognitive Computing for Embedded Applications (EMC2)

- arxiv: https://arxiv.org/abs/1803.08607

MobileNetV2

Inverted Residuals and Linear Bottlenecks: Mobile Networks for Classification, Detection and Segmentation

- intro: Google

- keywords: MobileNetV2, SSDLite, DeepLabv3

- arxiv: https://arxiv.org/abs/1801.04381

- github: https://github.com/tensorflow/models/tree/master/research/slim/nets/mobilenet

- blog: https://research.googleblog.com/2018/04/mobilenetv2-next-generation-of-on.html

SENet

Squeeze-and-Excitation Networks

- intro: ILSVRC 2017 image classification winner. Momenta & University of Oxford

- arxiv: https://arxiv.org/abs/1709.01507

- github(official, Caffe): https://github.com/hujie-frank/SENet

- github: https://github.com/bruinxiong/SENet.mxnet
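An SE block squeezes each channel to a single number by global average pooling, passes the channel descriptors through a small bottleneck MLP (reduction ratio r) ending in a sigmoid, and rescales every channel by its gate. A plain-Python sketch on a feature map stored as a list of flattened channels; the weights are supplied by the caller here and would normally be learned:

```python
import math
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def _matvec(w: Matrix, x: Sequence[float]) -> List[float]:
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def squeeze_excite(feature_map: Sequence[Sequence[float]], w1: Matrix, w2: Matrix) -> List[List[float]]:
    """Recalibrate the channels of a (C, H*W) feature map.

    w1 has shape (C/r, C) and w2 has shape (C, C/r) for reduction ratio r.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(ch) / len(ch) for ch in feature_map]
    # Excitation: FC -> ReLU -> FC -> sigmoid yields a gate in (0, 1) per channel.
    hidden = [max(0.0, v) for v in _matvec(w1, z)]
    gates = [1.0 / (1.0 + math.exp(-v)) for v in _matvec(w2, hidden)]
    # Scale: multiply every position of a channel by that channel's gate.
    return [[g * v for v in ch] for g, ch in zip(gates, feature_map)]


if __name__ == "__main__":
    fmap = [[2.0, 4.0], [6.0, 8.0]]
    # With zero weights every gate is sigmoid(0) = 0.5.
    print(squeeze_excite(fmap, [[0.0, 0.0]], [[0.0], [0.0]]))
```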

ImageNet Projects

Training an Object Classifier in Torch-7 on multiple GPUs over ImageNet

- intro: an ImageNet example in Torch

- github: https://github.com/soumith/imagenet-multiGPU.torch

Semi-Supervised Learning

Semi-Supervised Learning with Graphs

- intro: Label Propagation

- paper: http://pages.cs.wisc.edu/~jerryzhu/pub/thesis.pdf

- blog("Label Propagation Algorithm and Its Python Implementation", in Chinese): http://blog.csdn.net/zouxy09/article/details/49105265
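Label propagation spreads labels from the few labeled nodes of a similarity graph to the unlabeled ones. A minimal sketch of the iterative scheme, assuming a symmetric, non-negative weight matrix with zero diagonal: each unlabeled node repeatedly takes the weighted average of its neighbors' label distributions while labeled nodes stay clamped. This is a simplified variant for illustration, not a transcription of the thesis's algorithm, and the function name is hypothetical:

```python
from typing import Dict, List, Sequence


def label_propagation(weights: Sequence[Sequence[float]], labels: Dict[int, int],
                      num_classes: int, iterations: int = 200) -> List[int]:
    """Return a predicted class per node; labels maps labeled node index -> class."""
    n = len(weights)
    # Start from one-hot distributions for labeled nodes and uniform ones elsewhere.
    dist = [
        [1.0 if c == labels[i] else 0.0 for c in range(num_classes)]
        if i in labels else [1.0 / num_classes] * num_classes
        for i in range(n)
    ]
    for _ in range(iterations):
        new_dist = []
        for i in range(n):
            degree = sum(weights[i])
            if i in labels or degree == 0.0:
                # Labeled nodes are clamped; isolated nodes keep their distribution.
                new_dist.append(dist[i])
                continue
            new_dist.append([
                sum(weights[i][j] * dist[j][c] for j in range(n)) / degree
                for c in range(num_classes)
            ])
        dist = new_dist
    return [d.index(max(d)) for d in dist]


if __name__ == "__main__":
    # A 6-node chain whose two end nodes are labeled with different classes.
    w = [[0.0] * 6 for _ in range(6)]
    for i in range(5):
        w[i][i + 1] = w[i + 1][i] = 1.0
    print(label_propagation(w, {0: 0, 5: 1}, num_classes=2))
```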

Semi-Supervised Learning with Ladder Networks

- arxiv: http://arxiv.org/abs/1507.02672

- github: https://github.com/CuriousAI/ladder

- github: https://github.com/rinuboney/ladder

Semi-supervised Feature Transfer: The Practical Benefit of Deep Learning Today?

Temporal Ensembling for Semi-Supervised Learning

- intro: ICLR 2017

- arxiv: https://arxiv.org/abs/1610.02242

- github: https://github.com/smlaine2/tempens
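Temporal ensembling keeps, for every training sample, an exponential moving average Z of the network's predictions z across epochs, Z ← αZ + (1 − α)z, and divides by (1 − α^t) to correct the startup bias toward zero; the corrected values serve as targets for an unsupervised consistency loss. A plain-Python sketch; the class name and the default α = 0.6 are assumptions for illustration:

```python
from typing import List, Sequence


class TemporalEnsemble:
    """Per-sample moving average of predictions with startup bias correction."""

    def __init__(self, num_samples: int, num_classes: int, alpha: float = 0.6) -> None:
        self.alpha = alpha
        self.epoch = 0
        self.accum = [[0.0] * num_classes for _ in range(num_samples)]

    def update(self, predictions: Sequence[Sequence[float]]) -> List[List[float]]:
        """Fold in this epoch's predictions and return bias-corrected targets."""
        self.epoch += 1
        correction = 1.0 - self.alpha ** self.epoch
        targets = []
        for i, pred in enumerate(predictions):
            self.accum[i] = [self.alpha * a + (1.0 - self.alpha) * p
                             for a, p in zip(self.accum[i], pred)]
            targets.append([a / correction for a in self.accum[i]])
        return targets


if __name__ == "__main__":
    ens = TemporalEnsemble(num_samples=1, num_classes=2)
    print(ens.update([[0.2, 0.8]]))
    print(ens.update([[1.0, 0.0]]))
```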

Semi-supervised Knowledge Transfer for Deep Learning from Private Training Data

- intro: ICLR 2017 best paper award

- arxiv: https://arxiv.org/abs/1610.05755

- github: https://github.com/tensorflow/models/tree/8505222ea1f26692df05e65e35824c6c71929bb5/privacy

Infinite Variational Autoencoder for Semi-Supervised Learning

Transfer Learning

Discriminative Transfer Learning with Tree-based Priors

- intro: NIPS 2013

- paper: http://deeplearning.net/wp-content/uploads/2013/03/icml13_workshop.pdf

- paper: http://www.cs.toronto.edu/~nitish/treebasedpriors.pdf

How transferable are features in deep neural networks?

- intro: NIPS 2014

- arxiv: http://arxiv.org/abs/1411.1792

- paper: http://papers.nips.cc/paper/5347-how-transferable-are-features-in-deep-neural-networks.pdf

- github: https://github.com/yosinski/convnet_transfer

Learning and Transferring Mid-Level Image Representations using Convolutional Neural Networks

Learning Transferable Features with Deep Adaptation Networks

- intro: ICML 2015

- arxiv: https://arxiv.org/abs/1502.02791

- github: https://github.com/caoyue10/icml-caffe

Transferring Knowledge from a RNN to a DNN

- intro: CMU

- arxiv: https://arxiv.org/abs/1504.01483

Simultaneous Deep Transfer Across Domains and Tasks

- intro: ICCV 2015

- arxiv: http://arxiv.org/abs/1510.02192

Net2Net: Accelerating Learning via Knowledge Transfer

- arxiv: http://arxiv.org/abs/1511.05641

- github: https://github.com/soumith/net2net.torch

- notes(by Hugo Larochelle): https://www.evernote.com/shard/s189/sh/46414718-9663-440e-bbb7-65126b247b42/19688c438709251d8275d843b8158b03

Transfer Learning from Deep Features for Remote Sensing and Poverty Mapping

A theoretical framework for deep transfer learning

- keywords: transfer learning, PAC learning, PAC-Bayesian, deep learning

- homepage: http://imaiai.oxfordjournals.org/content/early/2016/04/28/imaiai.iaw008

- paper: http://imaiai.oxfordjournals.org/content/early/2016/04/28/imaiai.iaw008.full.pdf

Transfer learning using neon

Hyperparameter Transfer Learning through Surrogate Alignment for Efficient Deep Neural Network Training

What makes ImageNet good for transfer learning?

- project page: http://minyounghuh.com/papers/analysis/

- arxiv: http://arxiv.org/abs/1608.08614

Fine-tuning a Keras model using Theano trained Neural Network & Introduction to Transfer Learning

Multi-source Transfer Learning with Convolutional Neural Networks for Lung Pattern Analysis

Borrowing Treasures from the Wealthy: Deep Transfer Learning through Selective Joint Fine-tuning

- intro: CVPR 2017. The University of Hong Kong

- arxiv: https://arxiv.org/abs/1702.08690

Optimal Transport for Deep Joint Transfer Learning

- arxiv: https://arxiv.org/abs/1709.02995

Transfer Learning with Binary Neural Networks

- intro: Machine Learning on the Phone and other Consumer Devices, NIPS 2017 Workshop

- arxiv: https://arxiv.org/abs/1711.10761

Gradual Tuning: a better way of Fine Tuning the parameters of a Deep Neural Network

- intro: Université Paris Descartes, Paris

- arxiv: https://arxiv.org/abs/1711.10177

Born Again Neural Networks

- intro: University of Southern California & CMU & Amazon AI

- paper: http://metalearning.ml/papers/metalearn17_furlanello.pdf

One Shot Learning

One-shot Learning with Memory-Augmented Neural Networks

- intro: Google DeepMind

- arxiv: https://arxiv.org/abs/1605.06065

- github(Tensorflow): https://github.com/hmishra2250/NTM-One-Shot-TF

- note: http://rylanschaeffer.github.io/content/research/one_shot_learning_with_memory_augmented_nn/main.html

Matching Networks for One Shot Learning

- intro: Google DeepMind

- arxiv: https://arxiv.org/abs/1606.04080

- notes: https://blog.acolyer.org/2017/01/03/matching-networks-for-one-shot-learning/
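Matching Networks classify a query by attending over a labeled support set: ŷ = Σ a(x̂, x_i) y_i, where a is a softmax over cosine similarities between embeddings. The sketch below assumes the embeddings are already computed and leaves out the learned embedding functions and full context embeddings; function names are illustrative:

```python
import math
from typing import List, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def matching_predict(query: Sequence[float], support: Sequence[Sequence[float]],
                     support_labels: Sequence[int], num_classes: int) -> List[float]:
    """Class distribution for one query: attention-weighted sum of one-hot support labels."""
    sims = [cosine_similarity(query, s) for s in support]
    # Softmax over the support set, shifted by the max for numerical stability.
    peak = max(sims)
    weights = [math.exp(s - peak) for s in sims]
    total = sum(weights)
    probs = [0.0] * num_classes
    for w, label in zip(weights, support_labels):
        probs[label] += w / total
    return probs


if __name__ == "__main__":
    support = [[1.0, 0.0], [0.0, 1.0]]
    print(matching_predict([0.9, 0.1], support, [0, 1], num_classes=2))
```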

Learning feed-forward one-shot learners [NIPS 2016] [VALSE seminar]

Generative Adversarial Residual Pairwise Networks for One Shot Learning

- intro: Indian Institute of Science

- arxiv: https://arxiv.org/abs/1703.08033

Few-Shot Learning

Optimization as a Model for Few-Shot Learning

- intro: Twitter

- paper: https://openreview.net/pdf?id=rJY0-Kcll

- github: https://github.com/twitter/meta-learning-lstm

Learning to Compare: Relation Network for Few-Shot Learning

- intro: Queen Mary University of London & The University of Edinburgh

- arxiv: https://arxiv.org/abs/1711.06025

Unleashing the Potential of CNNs for Interpretable Few-Shot Learning

- intro: Beihang University & Johns Hopkins University

- arxiv: https://arxiv.org/abs/1711.08277

Low-Shot Learning from Imaginary Data

- intro: Facebook AI Research (FAIR) & CMU & Cornell University

- arxiv: https://arxiv.org/abs/1801.05401

Semantic Feature Augmentation in Few-shot Learning

- keywords: TriNet

- arxiv: https://arxiv.org/abs/1804.05298

- github: https://github.com/tankche1/Semantic-Feature-Augmentation-in-Few-shot-Learning

Multi-label Learning

CNN: Single-label to Multi-label

Deep Learning for Multi-label Classification

- arxiv: http://arxiv.org/abs/1502.05988

- code: http://meka.sourceforge.net

Predicting Unseen Labels using Label Hierarchies in Large-Scale Multi-label Learning

- intro: ECML 2015

- paper: https://www.kdsl.tu-darmstadt.de/fileadmin/user_upload/Group_KDSL/PUnL_ECML2015_camera_ready.pdf

Learning with a Wasserstein Loss

- project page: http://cbcl.mit.edu/wasserstein/

- arxiv: http://arxiv.org/abs/1506.05439

- data: http://cbcl.mit.edu/wasserstein/yfcc100m_labels.tar.gz

- MIT news: http://news.mit.edu/2015/more-flexible-machine-learning-1001

From Softmax to Sparsemax: A Sparse Model of Attention and Multi-Label Classification

- intro: ICML 2016

- arxiv: http://arxiv.org/abs/1602.02068

- github: https://github.com/gokceneraslan/SparseMax.torch

- github: https://github.com/Unbabel/sparsemax
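Sparsemax replaces softmax with the Euclidean projection of the score vector onto the probability simplex, so low-scoring classes can receive exactly zero probability. A sketch of the sort-based closed form: find the largest k with 1 + k·z_(k) > Σ_{j≤k} z_(j), set τ = (Σ_{j≤k} z_(j) − 1)/k, and output max(z_i − τ, 0):

```python
from typing import List, Sequence


def sparsemax(z: Sequence[float]) -> List[float]:
    """Euclidean projection of the score vector z onto the probability simplex."""
    if not z:
        raise ValueError("z must be non-empty")
    z_sorted = sorted(z, reverse=True)
    running_sum = 0.0
    support_size = 0
    support_sum = 0.0
    for k, value in enumerate(z_sorted, start=1):
        running_sum += value
        # k stays in the support while 1 + k * z_(k) exceeds the sum of the top k scores.
        if 1.0 + k * value > running_sum:
            support_size = k
            support_sum = running_sum
    tau = (support_sum - 1.0) / support_size
    return [max(v - tau, 0.0) for v in z]


if __name__ == "__main__":
    print(sparsemax([3.0, 1.0, 0.0]))
    print(sparsemax([0.1, 0.2, 0.3]))
```

Unlike softmax, sparsemax([3.0, 1.0, 0.0]) puts all of its mass on the first class, while close scores such as [0.1, 0.2, 0.3] keep every class in the support.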

CNN-RNN: A Unified Framework for Multi-label Image Classification

Improving Multi-label Learning with Missing Labels by Structured Semantic Correlations

Extreme Multi-label Loss Functions for Recommendation, Tagging, Ranking & Other Missing Label Applications

- intro: Indian Institute of Technology Delhi & MSR

- paper: https://manikvarma.github.io/pubs/jain16.pdf

Multi-Label Image Classification with Regional Latent Semantic Dependencies

- intro: Regional Latent Semantic Dependencies model (RLSD), RNN, RPN

- arxiv: https://arxiv.org/abs/1612.01082

Privileged Multi-label Learning

- intro: Peking University & University of Technology Sydney & University of Sydney

- arxiv: https://arxiv.org/abs/1701.07194

Multi-task Learning

Multitask Learning / Domain Adaptation

multi-task learning

- discussion: https://github.com/memect/hao/issues/93

Learning and Transferring Multi-task Deep Representation for Face Alignment

Multi-task learning of facial landmarks and expression

Multi-Task Deep Visual-Semantic Embedding for Video Thumbnail Selection

- intro: CVPR 2015

- paper: http://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Liu_Multi-Task_Deep_Visual-Semantic_2015_CVPR_paper.pdf

Learning Multiple Tasks with Deep Relationship Networks

Learning deep representation of multityped objects and tasks

Cross-stitch Networks for Multi-task Learning

Multi-Task Learning in Tensorflow (Part 1)

Deep Multi-Task Learning with Shared Memory

- intro: EMNLP 2016

- arxiv: http://arxiv.org/abs/1609.07222

Learning to Push by Grasping: Using multiple tasks for effective learning

Identifying beneficial task relations for multi-task learning in deep neural networks

- intro: EACL 2017

- arxiv: https://arxiv.org/abs/1702.08303

- github: https://github.com/jbingel/eacl2017_mtl

Multi-Task Learning Using Uncertainty to Weigh Losses for Scene Geometry and Semantics

- intro: University of Cambridge

- arxiv: https://arxiv.org/abs/1705.07115
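The paper weighs each task loss by a learned task (homoscedastic) uncertainty σ_i; for regression-style losses the combined objective takes the form Σ L_i/(2σ_i²) + log σ_i. A minimal sketch, assuming the network learns s_i = log σ_i² for numerical stability (classification terms in the paper use a slightly different scaling, which is not reproduced here):

```python
import math
from typing import Sequence


def uncertainty_weighted_loss(task_losses: Sequence[float], log_vars: Sequence[float]) -> float:
    """Combine per-task losses using learned log-variances s_i = log(sigma_i^2).

    Each term is 0.5 * (exp(-s_i) * L_i + s_i), i.e. L_i / (2 sigma_i^2) + log(sigma_i).
    """
    if len(task_losses) != len(log_vars):
        raise ValueError("one log-variance per task is required")
    return sum(0.5 * (math.exp(-s) * loss + s) for loss, s in zip(task_losses, log_vars))


if __name__ == "__main__":
    print(uncertainty_weighted_loss([2.0, 0.5], [0.0, 0.0]))
```

For a fixed task loss L_i the term is minimized at s_i = log L_i, so tasks with large loss are down-weighted automatically, while the s_i term keeps the weights from collapsing to zero.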

One Model To Learn Them All

- intro: Google Brain & University of Toronto

- arxiv: https://arxiv.org/abs/1706.05137

- github: https://github.com/tensorflow/tensor2tensor

MultiModel: Multi-Task Machine Learning Across Domains

- blog: https://research.googleblog.com/2017/06/multimodel-multi-task-machine-learning.html

An Overview of Multi-Task Learning in Deep Neural Networks

- intro: Aylien Ltd

- arxiv: https://arxiv.org/abs/1706.05098

PackNet: Adding Multiple Tasks to a Single Network by Iterative Pruning

End-to-End Multi-Task Learning with Attention

- intro: Imperial College London

- arxiv: https://arxiv.org/abs/1803.10704

Multi-modal Learning

Multimodal Deep Learning

Multimodal Convolutional Neural Networks for Matching Image and Sentence

- homepage: http://mcnn.noahlab.com.hk/project.html

- paper: http://mcnn.noahlab.com.hk/ICCV2015.pdf

- arxiv: http://arxiv.org/abs/1504.06063

A C++ library for Multimodal Deep Learning

Multimodal Learning for Image Captioning and Visual Question Answering

Multi modal retrieval and generation with deep distributed models

- slides: http://www.slideshare.net/roelofp/multi-modal-retrieval-and-generation-with-deep-distributed-models

- slides: http://pan.baidu.com/s/1kUSjn4z

Learning Aligned Cross-Modal Representations from Weakly Aligned Data

- homepage: http://projects.csail.mit.edu/cmplaces/index.html

- paper: http://projects.csail.mit.edu/cmplaces/content/paper.pdf

Variational methods for Conditional Multimodal Deep Learning

Training and Evaluating Multimodal Word Embeddings with Large-scale Web Annotated Images

- intro: NIPS 2016. University of California & Pinterest

- project page: http://www.stat.ucla.edu/~junhua.mao/multimodal_embedding.html

- arxiv: https://arxiv.org/abs/1611.08321

Deep Multi-Modal Image Correspondence Learning

Multimodal Deep Learning (D4L4 Deep Learning for Speech and Language UPC 2017)

Debugging Deep Learning

Some tips for debugging deep learning

Introduction to debugging neural networks

- blog: http://russellsstewart.com/notes/0.html

- reddit: https://www.reddit.com/r/MachineLearning/comments/4du7gv/introduction_to_debugging_neural_networks

How to Visualize, Monitor and Debug Neural Network Learning

Learning from learning curves

- intro: Kaggle

- blog: https://medium.com/@dsouza.amanda/learning-from-learning-curves-1a82c6f98f49#.o5synrvvl

Understanding CNN

Understanding the Effective Receptive Field in Deep Convolutional Neural Networks

- intro: NIPS 2016

- paper: http://www.cs.toronto.edu/~wenjie/papers/nips16/top.pdf
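The paper shows that the effective receptive field, the region whose pixels appreciably influence an output unit, is roughly Gaussian and occupies only a fraction of the theoretical receptive field. For reference, the theoretical size follows from the standard recursion r ← r + (k − 1)·j, j ← j·s over layers with kernel size k and stride s; a sketch with an illustrative function name:

```python
from typing import Iterable, Tuple


def theoretical_receptive_field(layers: Iterable[Tuple[int, int]]) -> int:
    """layers: (kernel_size, stride) pairs ordered from input to output."""
    rf, jump = 1, 1  # a single input pixel; distance between adjacent units in input pixels
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


if __name__ == "__main__":
    print(theoretical_receptive_field([(3, 1)] * 3))  # three stacked 3x3 convolutions
```

The effective receptive field always lies inside this theoretical region, since pixels outside it have zero gradient with respect to the output unit.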

Deep Learning Networks

PCANet: A Simple Deep Learning Baseline for Image Classification?

- arxiv: http://arxiv.org/abs/1404.3606

- code(Matlab): http://mx.nthu.edu.tw/~tsunghan/download/PCANet_demo_pyramid.rar

- mirror: http://pan.baidu.com/s/1mg24b3a

- github(C++): https://github.com/Ldpe2G/PCANet

- github(Python): https://github.com/IshitaTakeshi/PCANet

Convolutional Kernel Networks

- intro: NIPS 2014

- arxiv: http://arxiv.org/abs/1406.3332

Deeply-supervised Nets

- intro: DSN

- arxiv: http://arxiv.org/abs/1409.5185

- homepage: http://vcl.ucsd.edu/~sxie/2014/09/12/dsn-project/

- github: https://github.com/s9xie/DSN

- notes: http://zhangliliang.com/2014/11/02/paper-note-dsn/

FitNets: Hints for Thin Deep Nets

Striving for Simplicity: The All Convolutional Net

- intro: ICLR 2015 workshop

- arxiv: http://arxiv.org/abs/1412.6806

How these researchers tried something unconventional to come out with a smaller yet better Image Recognition.

- intro: Keras implementation of the All Convolutional Network (https://arxiv.org/abs/1412.6806)

- blog: https://medium.com/@matelabs_ai/how-these-researchers-tried-something-unconventional-to-came-out-with-a-smaller-yet-better-image-544327f30e72#.pfdbvdmuh

- github: https://github.com/MateLabs/All-Conv-Keras

Pointer Networks

- arxiv: https://arxiv.org/abs/1506.03134

- github: https://github.com/vshallc/PtrNets

- github(TensorFlow): https://github.com/ikostrikov/TensorFlow-Pointer-Networks

- github(TensorFlow): https://github.com/devsisters/pointer-network-tensorflow

- notes: https://github.com/dennybritz/deeplearning-papernotes/blob/master/notes/pointer-networks.md

Pointer Networks in TensorFlow (with sample code)

- blog: https://medium.com/@devnag/pointer-networks-in-tensorflow-with-sample-code-14645063f264#.sxipqfj30

- github: https://github.com/devnag/tensorflow-pointer-networks

Rectified Factor Networks

- arxiv: http://arxiv.org/abs/1502.06464

- github: https://github.com/untom/librfn

Correlational Neural Networks

Diversity Networks

Competitive Multi-scale Convolution

A Unified Approach for Learning the Parameters of Sum-Product Networks (SPN)

- intro: “The Sum-Product Network (SPN) is a new type of machine learning model with fast exact probabilistic inference over many layers.”

- arxiv: http://arxiv.org/abs/1601.00318

- homepage: http://spn.cs.washington.edu/index.shtml

- code: http://spn.cs.washington.edu/code.shtml
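A sum-product network is a DAG of weighted sum nodes and product nodes over leaf distributions; when it is complete and decomposable, one bottom-up pass yields exact probabilities, and a variable is marginalized out by letting its leaves evaluate to 1. A hand-built toy network over two binary variables, for illustration only (the linked paper is about learning SPN parameters, which this sketch does not attempt; all class names are hypothetical):

```python
from typing import Dict, Protocol, Sequence, Tuple


class Node(Protocol):
    def value(self, evidence: Dict[str, bool]) -> float: ...


class Leaf:
    """Bernoulli distribution over one variable."""

    def __init__(self, var: str, p_true: float) -> None:
        self.var, self.p_true = var, p_true

    def value(self, evidence: Dict[str, bool]) -> float:
        if self.var not in evidence:
            return 1.0  # marginalize: the leaf sums to 1 over both states
        return self.p_true if evidence[self.var] else 1.0 - self.p_true


class Product:
    def __init__(self, children: Sequence[Node]) -> None:
        self.children = children

    def value(self, evidence: Dict[str, bool]) -> float:
        result = 1.0
        for child in self.children:
            result *= child.value(evidence)
        return result


class Sum:
    def __init__(self, weighted_children: Sequence[Tuple[float, Node]]) -> None:
        self.weighted_children = weighted_children

    def value(self, evidence: Dict[str, bool]) -> float:
        return sum(w * child.value(evidence) for w, child in self.weighted_children)


def build_example_spn() -> Sum:
    # Mixture (weights sum to 1) of two fully factorized distributions over X1 and X2.
    return Sum([
        (0.3, Product([Leaf("X1", 0.9), Leaf("X2", 0.2)])),
        (0.7, Product([Leaf("X1", 0.1), Leaf("X2", 0.6)])),
    ])


if __name__ == "__main__":
    spn = build_example_spn()
    print("P(X1=True, X2=False) =", spn.value({"X1": True, "X2": False}))
    print("P(X1=True) =", spn.value({"X1": True}))
```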

Awesome Sum-Product Networks

Recombinator Networks: Learning Coarse-to-Fine Feature Aggregation

- intro: CVPR 2016

- arxiv: http://arxiv.org/abs/1511.07356

- paper: http://www.cv-foundation.org/openaccess/content_cvpr_2016/papers/Honari_Recombinator_Networks_Learning_CVPR_2016_paper.pdf

- github: https://github.com/SinaHonari/RCN

Dynamic Capacity Networks

- intro: ICML 2016

- arxiv: http://arxiv.org/abs/1511.07838

- github(Tensorflow): https://github.com/beopst/dcn.tf

- review: http://www.erogol.com/1314-2/

Bitwise Neural Networks

- paper: http://paris.cs.illinois.edu/pubs/minje-icmlw2015.pdf

- demo: http://minjekim.com/demo_bnn.html

Learning Discriminative Features via Label Consistent Neural Network

A Theory of Generative ConvNet

- project page: http://www.stat.ucla.edu/~ywu/GenerativeConvNet/main.html

- arxiv: http://arxiv.org/abs/1602.03264

- code: http://www.stat.ucla.edu/~ywu/GenerativeConvNet/doc/code.zip

How to Train Deep Variational Autoencoders and Probabilistic Ladder Networks

Group Equivariant Convolutional Networks (G-CNNs)

Deep Spiking Networks

Low-rank passthrough neural networks

Single Image 3D Interpreter Network

- intro: ECCV 2016 (oral)

- arxiv: https://arxiv.org/abs/1604.08685

Deeply-Fused Nets

SNN: Stacked Neural Networks

Universal Correspondence Network

- intro: NIPS 2016 full oral presentation. Stanford University & NEC Laboratories America

- project page: http://cvgl.stanford.edu/projects/ucn/

- arxiv: https://arxiv.org/abs/1606.03558

Progressive Neural Networks

- intro: Google DeepMind

- arxiv: https://arxiv.org/abs/1606.04671

- github: https://github.com/synpon/prog_nn

- github: https://github.com/yao62995/A3C

Holistic SparseCNN: Forging the Trident of Accuracy, Speed, and Size

Mollifying Networks

- author: Caglar Gulcehre, Marcin Moczulski, Francesco Visin, Yoshua Bengio

- arxiv: http://arxiv.org/abs/1608.04980

Domain Separation Networks

- intro: NIPS 2016

- arxiv: https://arxiv.org/abs/1608.06019

- github: https://github.com/tensorflow/models/tree/master/domain_adaptation

Local Binary Convolutional Neural Networks

CliqueCNN: Deep Unsupervised Exemplar Learning

- intro: NIPS 2016

- arxiv: http://arxiv.org/abs/1608.08792

- github: https://github.com/asanakoy/cliquecnn

Convexified Convolutional Neural Networks

Multi-scale brain networks

- arxiv: https://arxiv.org/abs/1711.11473

Input Convex Neural Networks

- arxiv: http://arxiv.org/abs/1609.07152

- github: https://github.com/locuslab/icnn

HyperNetworks

- arxiv: https://arxiv.org/abs/1609.09106

- blog: http://blog.otoro.net/2016/09/28/hyper-networks/

- github: https://github.com/hardmaru/supercell/blob/master/assets/MNIST_Static_HyperNetwork_Example.ipynb

HyperLSTM

X-CNN: Cross-modal Convolutional Neural Networks for Sparse Datasets

Tensor Switching Networks

- intro: NIPS 2016

- arxiv: https://arxiv.org/abs/1610.10087

- github: https://github.com/coxlab/tsnet

BranchyNet: Fast Inference via Early Exiting from Deep Neural Networks

- intro: Harvard University

- paper: http://www.eecs.harvard.edu/~htk/publication/2016-icpr-teerapittayanon-mcdanel-kung.pdf

- github: https://github.com/kunglab/branchynet

Spectral Convolution Networks

DelugeNets: Deep Networks with Massive and Flexible Cross-layer Information Inflows

PolyNet: A Pursuit of Structural Diversity in Very Deep Networks

- arxiv: https://arxiv.org/abs/1611.05725

- poster: http://mmlab.ie.cuhk.edu.hk/projects/cu_deeplink/polynet_poster.pdf

Weakly Supervised Cascaded Convolutional Networks

DeepSetNet: Predicting Sets with Deep Neural Networks

- intro: multi-class image classification and pedestrian detection

- arxiv: https://arxiv.org/abs/1611.08998

Steerable CNNs

- intro: University of Amsterdam

- arxiv: https://arxiv.org/abs/1612.08498

Feedback Networks

- project page: http://feedbacknet.stanford.edu/

- arxiv: https://arxiv.org/abs/1612.09508

- youtube: https://youtu.be/MY5Uhv38Ttg

Oriented Response Networks

OptNet: Differentiable Optimization as a Layer in Neural Networks

A fast and differentiable QP solver for PyTorch

- github: https://github.com/locuslab/qpth

Meta Networks

- arxiv: https://arxiv.org/abs/1703.00837

Deformable Convolutional Networks

- intro: ICCV 2017 oral. Microsoft Research Asia

- keywords: deformable convolution, deformable RoI pooling

- arxiv: https://arxiv.org/abs/1703.06211

- slides: http://www.jifengdai.org/slides/Deformable_Convolutional_Networks_Oral.pdf

- github(official): https://github.com/msracver/Deformable-ConvNets

- github: https://github.com/felixlaumon/deform-conv

- github: https://github.com/oeway/pytorch-deform-conv

Second-order Convolutional Neural Networks

- arxiv: https://arxiv.org/abs/1703.06817

Gabor Convolutional Networks

- arxiv: https://arxiv.org/abs/1705.01450

Deep Rotation Equivariant Network

https://arxiv.org/abs/1705.08623

Dense Transformer Networks

- intro: Washington State University & University of California, Davis

- arxiv: https://arxiv.org/abs/1705.08881

- github: https://github.com/divelab/dtn

Deep Complex Networks

- intro: Université de Montréal & INRS-EMT & Microsoft Maluuba

- arxiv: https://arxiv.org/abs/1705.09792

- github: https://github.com/ChihebTrabelsi/deep_complex_networks

Deep Quaternion Networks

- intro: University of Louisiana

- arxiv: https://arxiv.org/abs/1712.04604

DiracNets: Training Very Deep Neural Networks Without Skip-Connections

- intro: Université Paris-Est

- arxiv: https://arxiv.org/abs/1706.00388

- github: https://github.com/szagoruyko/diracnets

Dual Path Networks

- intro: National University of Singapore

- arxiv: https://arxiv.org/abs/1707.01629

- github(MXNet): https://github.com/cypw/DPNs

Primal-Dual Group Convolutions for Deep Neural Networks

Interleaved Group Convolutions for Deep Neural Networks

- intro: ICCV 2017

- keywords: interleaved group convolutional neural networks (IGCNets), IGCV1

- arxiv: https://arxiv.org/abs/1707.02725

- github: https://github.com/hellozting/InterleavedGroupConvolutions

IGCV2: Interleaved Structured Sparse Convolutional Neural Networks

- intro: CVPR 2018

- arxiv: https://arxiv.org/abs/1804.06202

Sensor Transformation Attention Networks

https://arxiv.org/abs/1708.01015

Sparsity Invariant CNNs

https://arxiv.org/abs/1708.06500

SPARCNN: SPAtially Related Convolutional Neural Networks

https://arxiv.org/abs/1708.07522

BranchyNet: Fast Inference via Early Exiting from Deep Neural Networks

https://arxiv.org/abs/1709.01686

Polar Transformer Networks

https://arxiv.org/abs/1709.01889

Tensor Product Generation Networks

https://arxiv.org/abs/1709.09118

Deep Competitive Pathway Networks

- intro: ACML 2017

- arxiv: https://arxiv.org/abs/1709.10282

- github: https://github.com/JiaRenChang/CoPaNet

Context Embedding Networks

https://arxiv.org/abs/1710.01691

Generalization in Deep Learning

- intro: MIT & University of Montreal

- arxiv: https://arxiv.org/abs/1710.05468

Understanding Deep Learning Generalization by Maximum Entropy

- intro: University of Science and Technology of China & Beijing Jiaotong University & Chinese Academy of Sciences

- arxiv: https://arxiv.org/abs/1711.07758

Do Convolutional Neural Networks Learn Class Hierarchy?

- intro: Bosch Research North America & Michigan State University

- arxiv: https://arxiv.org/abs/1710.06501

- video demo: https://vimeo.com/228263798

Deep Hyperspherical Learning

- intro: NIPS 2017

- arxiv: https://arxiv.org/abs/1711.03189

Beyond Sparsity: Tree Regularization of Deep Models for Interpretability

- intro: AAAI 2018

- arxiv: https://arxiv.org/abs/1711.06178

Neural Motifs: Scene Graph Parsing with Global Context

- keywords: Stacked Motif Networks

- arxiv: https://arxiv.org/abs/1711.06640

Priming Neural Networks

https://arxiv.org/abs/1711.05918

Three Factors Influencing Minima in SGD

https://arxiv.org/abs/1711.04623

BPGrad: Towards Global Optimality in Deep Learning via Branch and Pruning

https://arxiv.org/abs/1711.06959

BlockDrop: Dynamic Inference Paths in Residual Networks

- intro: UMD & UT Austin & IBM Research & Fusemachines Inc.

- arxiv: https://arxiv.org/abs/1711.08393

Wasserstein Introspective Neural Networks

https://arxiv.org/abs/1711.08875

SkipNet: Learning Dynamic Routing in Convolutional Networks

https://arxiv.org/abs/1711.09485

Do Convolutional Neural Networks act as Compositional Nearest Neighbors?

- intro: CMU & West Virginia University

- arxiv: https://arxiv.org/abs/1711.10683

ConvNets and ImageNet Beyond Accuracy: Explanations, Bias Detection, Adversarial Examples and Model Criticism

- intro: Facebook AI Research

- arxiv: https://arxiv.org/abs/1711.11443

Broadcasting Convolutional Network

https://arxiv.org/abs/1712.02517

Point-wise Convolutional Neural Network

- intro: Singapore University of Technology and Design

- arxiv: https://arxiv.org/abs/1712.05245

ScreenerNet: Learning Curriculum for Neural Networks

- intro: Intel Corporation & Allen Institute for Artificial Intelligence

- keywords: curricular learning, deep learning, deep q-learning

- arxiv: https://arxiv.org/abs/1801.00904

Sparsely Connected Convolutional Networks

https://arxiv.org/abs/1801.05895

Spherical CNNs

- intro: ICLR 2018

- arxiv: https://arxiv.org/abs/1801.10130

- github(official, PyTorch): https://github.com/jonas-koehler/s2cnn

Going Deeper in Spiking Neural Networks: VGG and Residual Architectures

- intro: Purdue University & Oculus Research & Facebook Research

- arxiv: https://arxiv.org/abs/1802.02627

Rotate your Networks: Better Weight Consolidation and Less Catastrophic Forgetting

https://arxiv.org/abs/1802.02950

Convolutional Neural Networks with Alternately Updated Clique

- intro: CVPR 2018

- arxiv: https://arxiv.org/abs/1802.10419

- github: https://github.com/iboing/CliqueNet

Decoupled Networks

- intro: CVPR 2018 (Spotlight)

- arxiv: https://arxiv.org/abs/1804.08071

Convolutions / Filters

Warped Convolutions: Efficient Invariance to Spatial Transformations

Coordinating Filters for Faster Deep Neural Networks

Shift: A Zero FLOP, Zero Parameter Alternative to Spatial Convolutions

- intro: UC Berkeley

- arxiv: https://arxiv.org/abs/1711.08141
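
The shift operation replaces a spatial convolution with per-channel spatial shifts followed by 1x1 convolutions, so the spatial step itself has no FLOPs and no parameters. The following is a minimal sketch of the shift step on nested lists. It assumes channels are split into equal, contiguous groups over the nine directions of a 3x3 neighborhood, with leftover channels going to the last direction, and that borders are zero-padded.

```python
from typing import List

Channel = List[List[float]]

# The nine shift directions of a 3x3 neighborhood, including the identity (0, 0).
DIRECTIONS = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def shift_channel(ch: Channel, dy: int, dx: int) -> Channel:
    """Move every value by (dy, dx); cells left empty are zero-filled."""
    h, w = len(ch), len(ch[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx  # source pixel that lands at (y, x)
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = ch[sy][sx]
    return out


def shift(feature_map: List[Channel]) -> List[Channel]:
    """Shift equal, contiguous channel groups in different directions.

    Only memory moves happen here: no multiplications, no learned weights.
    """
    group = max(1, len(feature_map) // len(DIRECTIONS))
    shifted = []
    for idx, ch in enumerate(feature_map):
        dy, dx = DIRECTIONS[min(idx // group, len(DIRECTIONS) - 1)]
        shifted.append(shift_channel(ch, dy, dx))
    return shifted
```

A 1x1 convolution applied after `shift` then mixes information across channels, which is how a shift-based module obtains a spatial receptive field.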

Spatially-Adaptive Filter Units for Deep Neural Networks

- intro: University of Ljubljana & University of Birmingham

- arxiv: https://arxiv.org/abs/1711.11473

clcNet: Improving the Efficiency of Convolutional Neural Network using Channel Local Convolutions

https://arxiv.org/abs/1712.06145

DCFNet: Deep Neural Network with Decomposed Convolutional Filters

https://arxiv.org/abs/1802.04145

Fast End-to-End Trainable Guided Filter

- intro: CVPR 2018

- project page: http://wuhuikai.me/DeepGuidedFilterProject/

- github(official, PyTorch): https://github.com/wuhuikai/DeepGuidedFilter

Diagonalwise Refactorization: An Efficient Training Method for Depthwise Convolutions

- arxiv: https://arxiv.org/abs/1803.09926

- github: https://github.com/clavichord93/diagonalwise-refactorization-tensorflow

Highway Networks

Highway Networks

- intro: ICML 2015 Deep Learning workshop

- intro: shortcut connections with gating functions; the gates are data-dependent and have learnable parameters

- arxiv: http://arxiv.org/abs/1505.00387

- github(PyTorch): https://github.com/analvikingur/pytorch_Highway
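
A highway layer mixes a transformed input and the unchanged input through a learned transform gate: y = H(x)·T(x) + x·(1 - T(x)). The following is a minimal dense-layer sketch on plain lists. It assumes a ReLU affine transform for H and a sigmoid affine transform for T. The paper suggests initializing the gate bias to a negative value so that layers start out close to carrying the input through.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def affine(w: Matrix, x: Sequence[float], b: Sequence[float]) -> List[float]:
    """Compute W x + b for a dense weight matrix stored as rows."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def highway_layer(
    x: Sequence[float], w_h: Matrix, b_h: Sequence[float], w_t: Matrix, b_t: Sequence[float]
) -> List[float]:
    """y = H(x) * T(x) + x * (1 - T(x)), element-wise.

    H is a ReLU affine transform and T a sigmoid transform gate. The input and
    output must have the same size for the carry term x * (1 - T(x)).
    """
    h = [max(0.0, z) for z in affine(w_h, x, b_h)]
    t = [sigmoid(z) for z in affine(w_t, x, b_t)]
    return [hi * ti + xi * (1.0 - ti) for hi, ti, xi in zip(h, t, x)]
```

With a strongly negative gate bias the layer passes x through almost unchanged. With a strongly positive one it behaves like a plain ReLU layer.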

Highway Networks with TensorFlow

Very Deep Learning with Highway Networks

- homepage(papers+code+FAQ): http://people.idsia.ch/~rupesh/very_deep_learning/

Training Very Deep Networks

- intro: Extends Highway Networks

- project page: http://people.idsia.ch/~rupesh/very_deep_learning/

- arxiv: http://arxiv.org/abs/1507.06228

Spatial Transformer Networks

Spatial Transformer Networks

- intro: NIPS 2015

- arxiv: http://arxiv.org/abs/1506.02025

- gitxiv: http://gitxiv.com/posts/5WTXTLuEA4Hd8W84G/spatial-transformer-networks

- github: https://github.com/daerduoCarey/SpatialTransformerLayer

- github: https://github.com/qassemoquab/stnbhwd

- github: https://github.com/skaae/transformer_network

- github(Caffe): https://github.com/happynear/SpatialTransformerLayer

- github: https://github.com/daviddao/spatial-transformer-tensorflow

- caffe-issue: https://github.com/BVLC/caffe/issues/3114

- code: https://lasagne.readthedocs.org/en/latest/modules/layers/special.html#lasagne.layers.TransformerLayer

- ipn(Lasagne): http://nbviewer.jupyter.org/github/Lasagne/Recipes/blob/master/examples/spatial_transformer_network.ipynb

- notes: https://www.evernote.com/shard/s189/sh/ad8a38de-9e98-4e06-b09e-574bd62893ff/32f72798c095dd7672f4cb017a32d9b4

- youtube: https://www.youtube.com/watch?v=6NOQC_fl1hQ

The power of Spatial Transformer Networks

- blog: http://torch.ch/blog/2015/09/07/spatial_transformers.html

- github: https://github.com/moodstocks/gtsrb.torch

Recurrent Spatial Transformer Networks

Deep Learning Paper Implementations: Spatial Transformer Networks - Part I

- blog: https://kevinzakka.github.io/2017/01/10/stn-part1/

- github: https://github.com/kevinzakka/blog-code/tree/master/spatial_transformer

Top-down Flow Transformer Networks

https://arxiv.org/abs/1712.02400

Non-Parametric Transformation Networks

- intro: CMU

- arxiv: https://arxiv.org/abs/1801.04520

Hierarchical Spatial Transformer Network

https://arxiv.org/abs/1801.09467

FractalNet

FractalNet: Ultra-Deep Neural Networks without Residuals

- project: http://people.cs.uchicago.edu/~larsson/fractalnet/

- arxiv: http://arxiv.org/abs/1605.07648

- github: https://github.com/gustavla/fractalnet

- github: https://github.com/edgelord/FractalNet

- github(Keras): https://github.com/snf/keras-fractalnet

Architecture Search for Convolutional Neural Networks

Neural Architecture Search with Reinforcement Learning

- intro: Google Brain

- paper: https://openreview.net/pdf?id=r1Ue8Hcxg

Neural Optimizer Search with Reinforcement Learning

- intro: ICML 2017

- arxiv: https://arxiv.org/abs/1709.07417

Learning Transferable Architectures for Scalable Image Recognition

- intro: Google Brain

- keywords: Neural Architecture Search Network (NASNet), AutoML

- arxiv: https://arxiv.org/abs/1707.07012

- github: https://github.com/titu1994/Keras-NASNet

- blog: https://research.googleblog.com/2017/11/automl-for-large-scale-image.html

- github: https://github.com/titu1994/neural-architecture-search

The First Step-by-Step Guide for Implementing Neural Architecture Search with Reinforcement Learning Using TensorFlow

- blog: https://lab.wallarm.com/the-first-step-by-step-guide-for-implementing-neural-architecture-search-with-reinforcement-99ade71b3d28

- github: https://github.com/wallarm/nascell-automl

Practical Network Blocks Design with Q-Learning

https://arxiv.org/abs/1708.05552

Simple And Efficient Architecture Search for Convolutional Neural Networks

- intro: Bosch Center for Artificial Intelligence & University of Freiburg

- arxiv: https://arxiv.org/abs/1711.04528

Progressive Neural Architecture Search

- intro: Johns Hopkins University & Google Brain & Google Cloud & Stanford University & Google AI

- arxiv: https://arxiv.org/abs/1712.00559

Finding Competitive Network Architectures Within a Day Using UCT

- intro: IBM Research AI – Ireland

- arxiv: https://arxiv.org/abs/1712.07420

Regularized Evolution for Image Classifier Architecture Search

https://arxiv.org/abs/1802.01548

Efficient Neural Architecture Search via Parameters Sharing

- intro: Google Brain & CMU & Stanford University

- arxiv: https://arxiv.org/abs/1802.03268

- github: https://github.com/carpedm20/ENAS-pytorch

- github: https://github.com/melodyguan/enas

Neural Architecture Search with Bayesian Optimisation and Optimal Transport

- intro: CMU

- arxiv: https://arxiv.org/abs/1802.07191

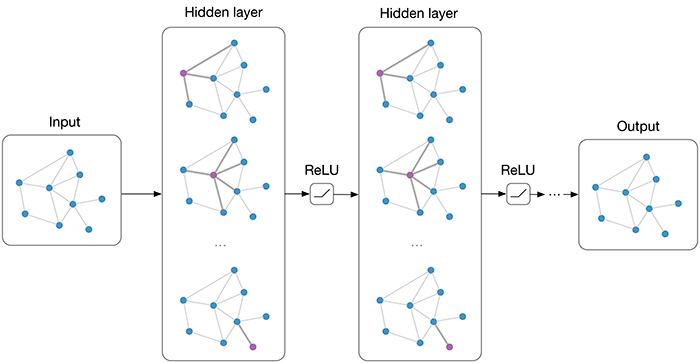

Graph Convolutional Networks

Learning Convolutional Neural Networks for Graphs

- intro: ICML 2016

- arxiv: http://arxiv.org/abs/1605.05273

Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering

- arxiv: https://arxiv.org/abs/1606.09375

- github: https://github.com/mdeff/cnn_graph

- github: https://github.com/pfnet-research/chainer-graph-cnn

Semi-Supervised Classification with Graph Convolutional Networks

- arxiv: http://arxiv.org/abs/1609.02907

- github: https://github.com/tkipf/gcn

- blog: http://tkipf.github.io/graph-convolutional-networks/
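
The layer-wise propagation rule of Kipf & Welling is H' = σ(D^-1/2 (A + I) D^-1/2 H W), where D is the diagonal degree matrix of A + I. Each node combines its own features with its neighbors' features using symmetric degree normalization, and then applies a shared linear map. The following is a minimal pure-Python sketch of one layer, assuming ReLU as σ and a dense adjacency matrix.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Dense matrix product a @ b."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def normalized_adjacency(adj: Matrix) -> Matrix:
    """D^-1/2 (A + I) D^-1/2, with D the degree matrix of A + I."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def gcn_layer(adj: Matrix, h: Matrix, w: Matrix) -> Matrix:
    """One graph convolution: normalize, propagate node features, project, apply ReLU."""
    propagated = matmul(matmul(normalized_adjacency(adj), h), w)
    return [[max(0.0, v) for v in row] for row in propagated]
```

The added self-loops (A + I) keep each node's own features in the average. On a graph with no edges, the layer reduces to a per-node linear map followed by ReLU.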

Graph Based Convolutional Neural Network

- intro: BMVC 2016

- arxiv: http://arxiv.org/abs/1609.08965

How powerful are Graph Convolutions? (review of Kipf & Welling, 2016)

http://www.inference.vc/how-powerful-are-graph-convolutions-review-of-kipf-welling-2016-2/

Graph Convolutional Networks

DeepGraph: Graph Structure Predicts Network Growth

Deep Learning with Sets and Point Clouds

- intro: CMU

- arxiv: https://arxiv.org/abs/1611.04500

Deep Learning on Graphs

Robust Spatial Filtering with Graph Convolutional Neural Networks

https://arxiv.org/abs/1703.00792

Modeling Relational Data with Graph Convolutional Networks

https://arxiv.org/abs/1703.06103

Distance Metric Learning using Graph Convolutional Networks: Application to Functional Brain Networks

- intro: Imperial College London

- arxiv: https://arxiv.org/abs/1703.02161

Deep Learning on Graphs with Graph Convolutional Networks

Deep Learning on Graphs with Keras

- intro: Keras implementation of Graph Convolutional Networks

- github: https://github.com/tkipf/keras-gcn

Learning Graph While Training: An Evolving Graph Convolutional Neural Network

https://arxiv.org/abs/1708.04675

Residual Gated Graph ConvNets

https://arxiv.org/abs/1711.07553

Probabilistic and Regularized Graph Convolutional Networks

- intro: CMU

- arxiv: https://arxiv.org/abs/1803.04489

Generative Models

Max-margin Deep Generative Models

- intro: NIPS 2015

- arxiv: http://arxiv.org/abs/1504.06787

- github: https://github.com/zhenxuan00/mmdgm

Discriminative Regularization for Generative Models

Auxiliary Deep Generative Models

- arxiv: http://arxiv.org/abs/1602.05473

- github: https://github.com/larsmaaloee/auxiliary-deep-generative-models

Sampling Generative Networks: Notes on a Few Effective Techniques

Conditional Image Synthesis With Auxiliary Classifier GANs

- arxiv: https://arxiv.org/abs/1610.09585

- github: https://github.com/buriburisuri/ac-gan

- github(Keras): https://github.com/lukedeo/keras-acgan

On the Quantitative Analysis of Decoder-Based Generative Models

- intro: University of Toronto & OpenAI & CMU

- arxiv: https://arxiv.org/abs/1611.04273

- github: https://github.com/tonywu95/eval_gen

Boosted Generative Models

An Architecture for Deep, Hierarchical Generative Models

- intro: NIPS 2016

- arxiv: https://arxiv.org/abs/1612.04739

- github: https://github.com/Philip-Bachman/MatNets-NIPS

Deep Learning and Hierarchal Generative Models

- intro: NIPS 2016. MIT

- arxiv: https://arxiv.org/abs/1612.09057

Probabilistic Torch

- intro: Probabilistic Torch is a library for deep generative models that extends PyTorch

- github: https://github.com/probtorch/probtorch

Tutorial on Deep Generative Models

- intro: UAI 2017 Tutorial: Shakir Mohamed & Danilo Rezende (DeepMind)

- youtube: https://www.youtube.com/watch?v=JrO5fSskISY

- mirror: https://www.bilibili.com/video/av16428277/

- slides: http://www.shakirm.com/slides/DeepGenModelsTutorial.pdf

A Note on the Inception Score

- intro: Stanford University

- arxiv: https://arxiv.org/abs/1801.01973
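
The Inception Score is IS = exp(E_x KL(p(y|x) || p(y))). Here p(y|x) comes from a pretrained classifier (Inception v3 in the original metric), and p(y) is the average of those predictions over the generated samples. The following is a minimal sketch that takes the class probabilities as given, assuming they were already produced by some classifier. It does not reproduce the split-and-average protocol commonly used when reporting scores.

```python
import math
from typing import Sequence


def inception_score(probs: Sequence[Sequence[float]]) -> float:
    """probs[i] is the classifier's p(y | x_i) for generated sample i."""
    n = len(probs)
    k = len(probs[0])
    # Marginal label distribution p(y), averaged over all samples.
    marginal = [sum(p[j] for p in probs) / n for j in range(k)]
    kl_sum = 0.0
    for p in probs:
        kl_sum += sum(pj * math.log(pj / mj) for pj, mj in zip(p, marginal) if pj > 0)
    return math.exp(kl_sum / n)
```

Confident per-sample predictions that are spread evenly over many classes push the score up toward the number of classes. Identical predictions for every sample give a score of 1.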

Gradient Layer: Enhancing the Convergence of Adversarial Training for Generative Models

- intro: AISTATS 2018. The University of Tokyo

- arxiv: https://arxiv.org/abs/1801.02227

Deep Learning and Robots

Robot Learning Manipulation Action Plans by “Watching” Unconstrained Videos from the World Wide Web

- intro: AAAI 2015

- paper: http://www.umiacs.umd.edu/~yzyang/paper/YouCookMani_CameraReady.pdf

- author page: http://www.umiacs.umd.edu/~yzyang/

End-to-End Training of Deep Visuomotor Policies

Comment on Open AI’s Efforts to Robot Learning

The Curious Robot: Learning Visual Representations via Physical Interactions

How to build a robot that “sees” with $100 and TensorFlow

Deep Visual Foresight for Planning Robot Motion

- project page: https://sites.google.com/site/brainrobotdata/

- arxiv: https://arxiv.org/abs/1610.00696

- video: https://sites.google.com/site/robotforesight/

Sim-to-Real Robot Learning from Pixels with Progressive Nets

- intro: Google DeepMind

- arxiv: https://arxiv.org/abs/1610.04286

Towards Lifelong Self-Supervision: A Deep Learning Direction for Robotics

A Differentiable Physics Engine for Deep Learning in Robotics

Deep-learning in Mobile Robotics - from Perception to Control Systems: A Survey on Why and Why not

- intro: City University of Hong Kong & Hong Kong University of Science and Technology

- arxiv: https://arxiv.org/abs/1612.07139

Deep Robotic Learning

- talk: https://simons.berkeley.edu/talks/sergey-levine-01-24-2017-1

- youtube: https://www.youtube.com/watch?v=jtjW5Pye_44

Deep Learning in Robotics: A Review of Recent Research

https://arxiv.org/abs/1707.07217

Deep Learning for Robotics

- intro: by Pieter Abbeel

- video: https://www.facebook.com/nipsfoundation/videos/1554594181298482/

- mirror: https://www.bilibili.com/video/av17078186/

- slides: https://www.dropbox.com/s/4fhczb9cxkuqalf/2017_11_xx_BARS-Abbeel.pdf?dl=0

DroNet: Learning to Fly by Driving

- project page: http://rpg.ifi.uzh.ch/dronet.html

- paper: http://rpg.ifi.uzh.ch/docs/RAL18_Loquercio.pdf

- github: https://github.com/uzh-rpg/rpg_public_dronet

A Survey on Deep Learning Methods for Robot Vision

https://arxiv.org/abs/1803.10862

Deep Learning on Mobile / Embedded Devices

Convolutional neural networks on the iPhone with VGGNet

- blog: http://matthijshollemans.com/2016/08/30/vggnet-convolutional-neural-network-iphone/

- github: https://github.com/hollance/VGGNet-Metal

TensorFlow for Mobile Poets

The Convolutional Neural Network(CNN) for Android

- intro: CnnForAndroid: a classification project using a convolutional neural network (CNN) on the Android platform. It also supports Caffe models

- github: https://github.com/zhangqianhui/CnnForAndroid

TensorFlow on Android

Experimenting with TensorFlow on Android

- part 1: https://medium.com/@mgazar/experimenting-with-tensorflow-on-android-pt-1-362683b31838#.5gbp2d4st

- part 2: https://medium.com/@mgazar/experimenting-with-tensorflow-on-android-part-2-12f3dc294eaf#.2gx3o65f5

- github: https://github.com/MostafaGazar/tensorflow

XNOR.ai frees AI from the prison of the supercomputer

Embedded Deep Learning with NVIDIA Jetson

Embedded and mobile deep learning research resources

https://github.com/csarron/emdl

Modeling the Resource Requirements of Convolutional Neural Networks on Mobile Devices

https://arxiv.org/abs/1709.09118

Benchmarks

Deep Learning’s Accuracy

Benchmarks for popular CNN models

- intro: Benchmarks for popular convolutional neural network models on CPU and different GPUs, with and without cuDNN.

- github: https://github.com/jcjohnson/cnn-benchmarks

Deep Learning Benchmarks

http://add-for.com/deep-learning-benchmarks/

cudnn-rnn-benchmarks

Papers

Reweighted Wake-Sleep

Probabilistic Backpropagation for Scalable Learning of Bayesian Neural Networks

- paper: http://arxiv.org/abs/1502.05336

- github: https://github.com/HIPS/Probabilistic-Backpropagation

Deeply-Supervised Nets

Deep learning

- intro: Nature 2015

- author: Yann LeCun, Yoshua Bengio & Geoffrey Hinton

- paper: http://www.cs.toronto.edu/~hinton/absps/NatureDeepReview.pdf

On the Expressive Power of Deep Learning: A Tensor Analysis

Understanding and Predicting Image Memorability at a Large Scale

- intro: MIT. ICCV 2015

- homepage: http://memorability.csail.mit.edu/

- paper: https://people.csail.mit.edu/khosla/papers/iccv2015_khosla.pdf

- code: http://memorability.csail.mit.edu/download.html

- reviews: http://petapixel.com/2015/12/18/how-memorable-are-times-top-10-photos-of-2015-to-a-computer/

Towards Open Set Deep Networks

Structured Prediction Energy Networks

- intro: ICML 2016. SPEN

- arxiv: http://arxiv.org/abs/1511.06350

- github: https://github.com/davidBelanger/SPEN

Deep Neural Networks predict Hierarchical Spatio-temporal Cortical Dynamics of Human Visual Object Recognition

Recent Advances in Convolutional Neural Networks

Understanding Deep Convolutional Networks

DeepCare: A Deep Dynamic Memory Model for Predictive Medicine

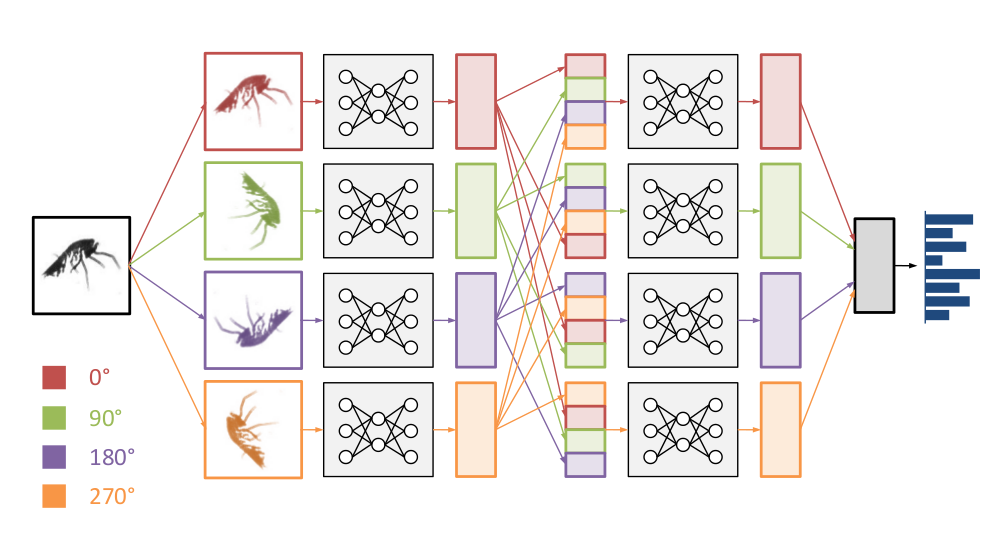

Exploiting Cyclic Symmetry in Convolutional Neural Networks

- intro: ICML 2016

- arxiv: http://arxiv.org/abs/1602.02660

- github(Winning solution for the National Data Science Bowl competition on Kaggle (plankton classification)): https://github.com/benanne/kaggle-ndsb

- ref(use Cyclic pooling): http://benanne.github.io/2015/03/17/plankton.html
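
Cyclic slicing feeds the four 90-degree rotations of an input through the same network. Cyclic pooling then combines the resulting features, so the output does not change when the input is rotated by a multiple of 90 degrees. The following is a minimal sketch. It assumes a hypothetical `features` callable stands in for the shared network and uses mean pooling; other pooling functions can be substituted.

```python
from typing import Callable, List

Image = List[List[float]]


def rot90(img: Image) -> Image:
    """Rotate an image 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*img)][::-1]


def cyclic_pool(img: Image, features: Callable[[Image], List[float]]) -> List[float]:
    """Cyclic slicing + cyclic (mean) pooling over the 0/90/180/270 degree rotations."""
    views = [img]
    for _ in range(3):
        views.append(rot90(views[-1]))
    outs = [features(v) for v in views]  # same "network" applied to every view
    return [sum(vals) / len(outs) for vals in zip(*outs)]
```

Even if `features` itself is not rotation invariant, the pooled result is: rotating the input only reorders the four views.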

Cross-dimensional Weighting for Aggregated Deep Convolutional Features

- arxiv: http://arxiv.org/abs/1512.04065

- github: https://github.com/yahoo/crow

Understanding Visual Concepts with Continuation Learning

- project page: http://willwhitney.github.io/understanding-visual-concepts/

- arxiv: http://arxiv.org/abs/1602.06822

- github: https://github.com/willwhitney/understanding-visual-concepts

Learning Efficient Algorithms with Hierarchical Attentive Memory

- arxiv: http://arxiv.org/abs/1602.03218

- github: https://github.com/Smerity/tf-ham

DrMAD: Distilling Reverse-Mode Automatic Differentiation for Optimizing Hyperparameters of Deep Neural Networks

Do Deep Convolutional Nets Really Need to be Deep (Or Even Convolutional)?

- arxiv: http://arxiv.org/abs/1603.05691

- review: http://www.erogol.com/paper-review-deep-convolutional-nets-really-need-deep-even-convolutional/

Harnessing Deep Neural Networks with Logic Rules

Degrees of Freedom in Deep Neural Networks

Deep Networks with Stochastic Depth

- arxiv: http://arxiv.org/abs/1603.09382

- github: https://github.com/yueatsprograms/Stochastic_Depth

- notes(“Stochastic Depth Networks will Become the New Normal”): http://deliprao.com/archives/134

- github: https://github.com/dblN/stochastic_depth_keras

- github: https://github.com/yasunorikudo/chainer-ResDrop

- review: https://medium.com/@tim_nth/review-deep-networks-with-stochastic-depth-51bd53acfe72
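
Stochastic depth drops whole residual blocks at random during training and keeps all of them at test time, scaling each block's output by its survival probability. The following is a minimal sketch on plain vectors. It assumes hypothetical residual functions `blocks` and uses the linear decay rule for survival probabilities with p_L = 0.5, the setting reported in the paper. The ReLU the paper applies after each addition is left out for brevity.

```python
import random
from typing import Callable, List, Sequence

Vec = List[float]


def survival_probs(num_blocks: int, p_last: float = 0.5) -> List[float]:
    """Linear decay rule p_l = 1 - (l / L) * (1 - p_L) for l = 1..L."""
    return [1.0 - (l / num_blocks) * (1.0 - p_last) for l in range(1, num_blocks + 1)]


def stochastic_depth_forward(
    x: Vec,
    blocks: Sequence[Callable[[Vec], Vec]],
    probs: Sequence[float],
    training: bool,
    rng: random.Random,
) -> Vec:
    """Residual stack x <- x + b_l * f_l(x) (the post-addition ReLU is omitted)."""
    for f, p in zip(blocks, probs):
        if training:
            if rng.random() < p:  # the block survives this mini-batch
                x = [xi + ri for xi, ri in zip(x, f(x))]
            # otherwise only the identity shortcut is used
        else:
            # at test time every block runs, scaled by its survival probability
            x = [xi + p * ri for xi, ri in zip(x, f(x))]
    return x
```

Early blocks, which extract low-level features used by everything later, survive most often. The deepest block survives with probability p_L.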

LIFT: Learned Invariant Feature Transform

- intro: ECCV 2016

- arxiv: http://arxiv.org/abs/1603.09114

- github(official): https://github.com/cvlab-epfl/LIFT

Bridging the Gaps Between Residual Learning, Recurrent Neural Networks and Visual Cortex

- arxiv: https://arxiv.org/abs/1604.03640

- slides: http://prlab.tudelft.nl/sites/default/files/rnnResnetCortex.pdf

Understanding How Image Quality Affects Deep Neural Networks

- arxiv: http://arxiv.org/abs/1604.04004

- reddit: https://www.reddit.com/r/MachineLearning/comments/4exk3u/dcnns_are_more_sensitive_to_blur_and_noise_than/

Deep Embedding for Spatial Role Labeling

- arxiv: http://arxiv.org/abs/1603.08474

- github: https://github.com/oswaldoludwig/visually-informed-embedding-of-word-VIEW-

Unreasonable Effectiveness of Learning Neural Nets: Accessible States and Robust Ensembles

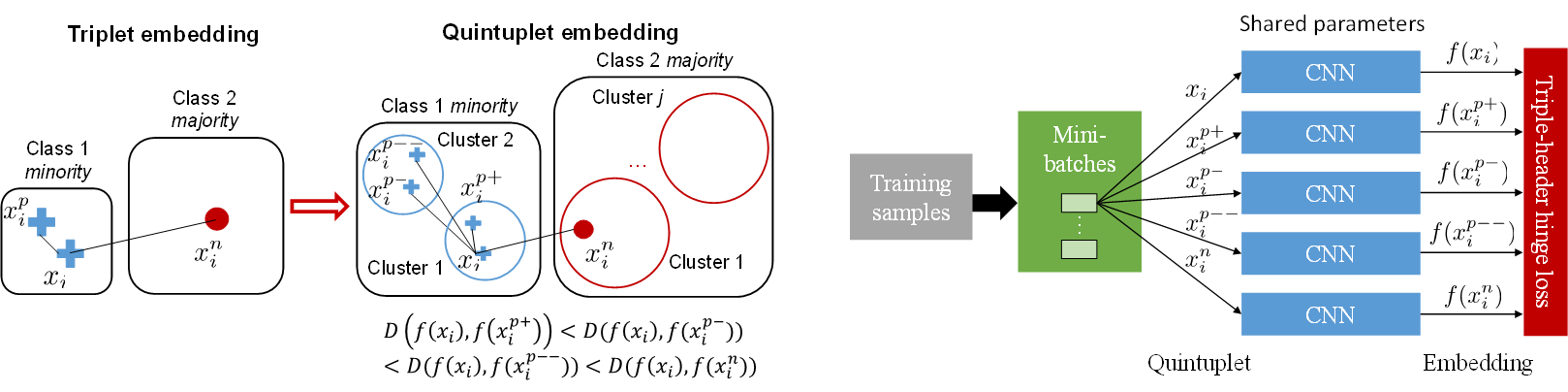

Learning Deep Representation for Imbalanced Classification

- intro: CVPR 2016

- keywords: Deep Learning Large Margin Local Embedding (LMLE)

- project page: http://mmlab.ie.cuhk.edu.hk/projects/LMLE.html

- paper: http://personal.ie.cuhk.edu.hk/~ccloy/files/cvpr_2016_imbalanced.pdf

- code: http://mmlab.ie.cuhk.edu.hk/projects/LMLE/lmle_code.zip

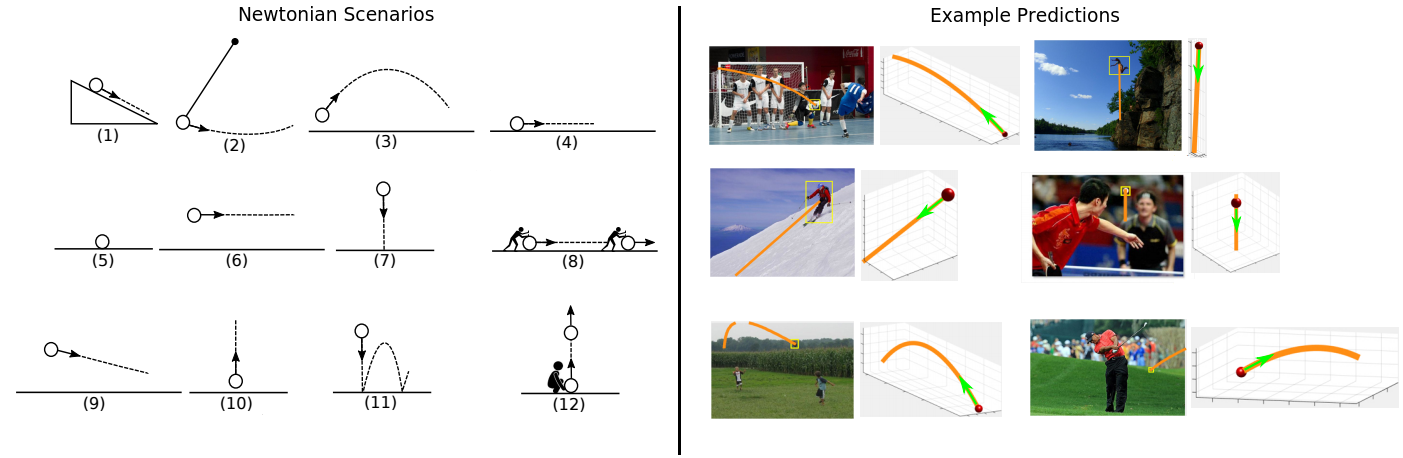

Newtonian Image Understanding: Unfolding the Dynamics of Objects in Static Images

- homepage: http://allenai.org/plato/newtonian-understanding/

- arxiv: http://arxiv.org/abs/1511.04048

- github: https://github.com/roozbehm/newtonian

DeepMath - Deep Sequence Models for Premise Selection

Convolutional Neural Networks Analyzed via Convolutional Sparse Coding

Systematic evaluation of CNN advances on the ImageNet

Why does deep and cheap learning work so well?

- intro: Harvard and MIT

- arxiv: http://arxiv.org/abs/1608.08225

- review: https://www.technologyreview.com/s/602344/the-extraordinary-link-between-deep-neural-networks-and-the-nature-of-the-universe/

A scalable convolutional neural network for task-specified scenarios via knowledge distillation

Alternating Back-Propagation for Generator Network

- project page(code+data): http://www.stat.ucla.edu/~ywu/ABP/main.html

- paper: http://www.stat.ucla.edu/~ywu/ABP/doc/arXivABP.pdf

A Novel Representation of Neural Networks

Optimization of Convolutional Neural Network using Microcanonical Annealing Algorithm

- intro: IEEE ICACSIS 2016

- arxiv: https://arxiv.org/abs/1610.02306

Uncertainty in Deep Learning

- intro: PhD Thesis. Cambridge Machine Learning Group

- blog: http://mlg.eng.cam.ac.uk/yarin/blog_2248.html

- thesis: http://mlg.eng.cam.ac.uk/yarin/thesis/thesis.pdf

Deep Convolutional Neural Network Design Patterns

Extensions and Limitations of the Neural GPU

Neural Functional Programming

Deep Information Propagation

Compressed Learning: A Deep Neural Network Approach

A backward pass through a CNN using a generative model of its activations

Understanding deep learning requires rethinking generalization

- intro: ICLR 2017 best paper. MIT & Google Brain & UC Berkeley & Google DeepMind

- arxiv: https://arxiv.org/abs/1611.03530

- example code: https://github.com/pluskid/fitting-random-labels

- notes: https://theneuralperspective.com/2017/01/24/understanding-deep-learning-requires-rethinking-generalization/

Learning the Number of Neurons in Deep Networks

- intro: NIPS 2016

- arxiv: https://arxiv.org/abs/1611.06321

Survey of Expressivity in Deep Neural Networks

- intro: Presented at NIPS 2016 Workshop on Interpretable Machine Learning in Complex Systems

- intro: Google Brain & Cornell University & Stanford University

- arxiv: https://arxiv.org/abs/1611.08083

Designing Neural Network Architectures using Reinforcement Learning

- intro: MIT

- project page: https://bowenbaker.github.io/metaqnn/

- arxiv: https://arxiv.org/abs/1611.02167

Towards Robust Deep Neural Networks with BANG

- intro: University of Colorado

- arxiv: https://arxiv.org/abs/1612.00138

Deep Quantization: Encoding Convolutional Activations with Deep Generative Model

- intro: University of Science and Technology of China & MSR

- arxiv: https://arxiv.org/abs/1611.09502

A Probabilistic Theory of Deep Learning

A Probabilistic Framework for Deep Learning

- intro: Rice University

- arxiv: https://arxiv.org/abs/1612.01936

Paying More Attention to Attention: Improving the Performance of Convolutional Neural Networks via Attention Transfer

- arxiv: https://arxiv.org/abs/1612.03928

- github(PyTorch): https://github.com/szagoruyko/attention-transfer
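
Attention transfer trains a student network to match the teacher's spatial attention maps. Each map is computed by collapsing activations across channels (for example, the sum of squared activations) and then normalizing. The following is a minimal sketch for one layer pair. It assumes activations are given as nested lists with matching spatial size and uses a plain L2 distance between the normalized maps. The paper adds such terms for several layer pairs to the usual classification loss, with a weighting factor.

```python
import math
from typing import List

Activations = List[List[List[float]]]  # [channel][row][col]


def attention_map(acts: Activations, p: int = 2) -> List[float]:
    """Spatial attention: sum over channels of |A_c|^p, flattened and L2-normalized."""
    h, w = len(acts[0]), len(acts[0][0])
    flat = [sum(abs(ch[y][x]) ** p for ch in acts) for y in range(h) for x in range(w)]
    norm = math.sqrt(sum(v * v for v in flat)) or 1.0  # avoid dividing by zero
    return [v / norm for v in flat]


def attention_transfer_loss(student: Activations, teacher: Activations) -> float:
    """L2 distance between normalized student and teacher attention maps."""
    qs, qt = attention_map(student), attention_map(teacher)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(qs, qt)))
```

Because the maps are summed over channels, the student and teacher may have different channel counts, as long as their spatial sizes agree.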

Risk versus Uncertainty in Deep Learning: Bayes, Bootstrap and the Dangers of Dropout

- intro: Google DeepMind

- paper: http://bayesiandeeplearning.org/papers/BDL_4.pdf

Outrageously Large Neural Networks: The Sparsely-Gated Mixture-of-Experts Layer

- intro: Google Brain & Jagiellonian University

- keywords: Sparsely-Gated Mixture-of-Experts layer (MoE), language modeling and machine translation

- arxiv: https://arxiv.org/abs/1701.06538

- reddit: https://www.reddit.com/r/MachineLearning/comments/5pud72/research_outrageously_large_neural_networks_the/
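
The sparsely-gated MoE layer computes y = Σ_i G(x)_i E_i(x). The gate keeps only the top-k gating logits and applies a softmax over them, so experts with a zero gate are never evaluated. The following is a minimal sketch. It assumes hypothetical callables for the gating network and the experts, and it leaves out both the tunable Gaussian noise the paper adds to the gating logits and the auxiliary load-balancing losses.

```python
import math
from typing import Callable, List, Optional, Sequence


def top_k_gates(logits: Sequence[float], k: int) -> List[float]:
    """Softmax over the k largest logits; every other gate is exactly zero."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)
    exps = {i: math.exp(logits[i] - m) for i in top}
    total = sum(exps.values())
    return [exps[i] / total if i in exps else 0.0 for i in range(len(logits))]


def moe_forward(
    x: object,
    gate_logits: Callable[[object], Sequence[float]],
    experts: Sequence[Callable[[object], List[float]]],
    k: int,
) -> Optional[List[float]]:
    """Gate-weighted sum of the selected experts' outputs."""
    out: Optional[List[float]] = None
    for g, expert in zip(top_k_gates(gate_logits(x), k), experts):
        if g == 0.0:
            continue  # unselected expert: skipped entirely
        y = expert(x)
        out = [g * v for v in y] if out is None else [o + g * v for o, v in zip(out, y)]
    return out
```

The computational saving comes from the `continue`: with k much smaller than the number of experts, most experts do no work for a given input.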

Deep Network Guided Proof Search

- intro: Google Research & University of Innsbruck

- arxiv: https://arxiv.org/abs/1701.06972

PathNet: Evolution Channels Gradient Descent in Super Neural Networks

- intro: Google DeepMind & Google Brain

- arxiv: https://arxiv.org/abs/1701.08734

- notes: https://medium.com/intuitionmachine/pathnet-a-modular-deep-learning-architecture-for-agi-5302fcf53273#.8f0o6w3en

Reluplex: An Efficient SMT Solver for Verifying Deep Neural Networks

The Power of Sparsity in Convolutional Neural Networks

Learning across scales - A multiscale method for Convolution Neural Networks

Stacking-based Deep Neural Network: Deep Analytic Network on Convolutional Spectral Histogram Features

A Compositional Object-Based Approach to Learning Physical Dynamics

- intro: ICLR 2017. Neural Physics Engine

- paper: https://openreview.net/pdf?id=Bkab5dqxe

- github: https://github.com/mbchang/dynamics

Genetic CNN

- arxiv: https://arxiv.org/abs/1703.01513

- github(TensorFlow): https://github.com/aqibsaeed/Genetic-CNN

Deep Sets

- intro: Amazon Web Services & CMU

- keywords: statistic estimation, point cloud classification, set expansion, and image tagging

- arxiv: https://arxiv.org/abs/1703.06114

Multiscale Hierarchical Convolutional Networks

- arxiv: https://arxiv.org/abs/1703.04140

- github: https://github.com/jhjacobsen/HierarchicalCNN

Deep Neural Networks Do Not Recognize Negative Images

https://arxiv.org/abs/1703.06857

Failures of Deep Learning

Multi-Scale Dense Convolutional Networks for Efficient Prediction

- intro: Cornell University & Tsinghua University & Fudan University & Facebook AI Research

- arxiv: https://arxiv.org/abs/1703.09844

- github: https://github.com/gaohuang/MSDNet

Scaling the Scattering Transform: Deep Hybrid Networks

- arxiv: https://arxiv.org/abs/1703.08961

- github: https://github.com/edouardoyallon/scalingscattering

- github(CuPy/PyTorch): https://github.com/edouardoyallon/pyscatwave

Deep Learning is Robust to Massive Label Noise

https://arxiv.org/abs/1705.10694

Input Fast-Forwarding for Better Deep Learning

- intro: ICIAR 2017

- keywords: Fast-Forward Network (FFNet)

- arxiv: https://arxiv.org/abs/1705.08479

Deep Mutual Learning

- keywords: deep mutual learning (DML)

- arxiv: https://arxiv.org/abs/1706.00384
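
In deep mutual learning, peer networks train together. Each network adds to its supervised loss a KL term that pulls its predictions toward those of the other network: L_1 = L_C1 + D_KL(p_2 || p_1), and symmetrically for the second network. The following is a minimal sketch for a single example and two peers, taking the logits as given.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) in nats."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def dml_losses(logits1: Sequence[float], logits2: Sequence[float], label: int) -> Tuple[float, float]:
    """Loss of each peer: its own cross-entropy plus KL from the other peer's prediction.

    When one network is updated, the other network's probabilities act as a
    fixed target; this sketch only computes the loss values.
    """
    p1, p2 = softmax(logits1), softmax(logits2)
    loss1 = -math.log(p1[label]) + kl_divergence(p2, p1)
    loss2 = -math.log(p2[label]) + kl_divergence(p1, p2)
    return loss1, loss2
```

When the peers already agree, the KL terms vanish and each loss reduces to ordinary cross-entropy.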

Automated Problem Identification: Regression vs Classification via Evolutionary Deep Networks

- intro: University of Cape Town

- arxiv: https://arxiv.org/abs/1707.00703

Revisiting Unreasonable Effectiveness of Data in Deep Learning Era

- intro: Google Research & CMU

- arxiv: https://arxiv.org/abs/1707.02968

- blog: https://research.googleblog.com/2017/07/revisiting-unreasonable-effectiveness.html

Deep Layer Aggregation

- intro: UC Berkeley

- arxiv: https://arxiv.org/abs/1707.06484

Improving Robustness of Feature Representations to Image Deformations using Powered Convolution in CNNs

https://arxiv.org/abs/1707.07830

Learning uncertainty in regression tasks by deep neural networks

- intro: Free University of Berlin

- arxiv: https://arxiv.org/abs/1707.07287

Generalizing the Convolution Operator in Convolutional Neural Networks

https://arxiv.org/abs/1707.09864

Convolution with Logarithmic Filter Groups for Efficient Shallow CNN

https://arxiv.org/abs/1707.09855

Deep Multi-View Learning with Stochastic Decorrelation Loss

https://arxiv.org/abs/1707.09669

Take it in your stride: Do we need striding in CNNs?

https://arxiv.org/abs/1712.02502

Security Risks in Deep Learning Implementation

- intro: Qihoo 360 Security Research Lab & University of Georgia & University of Virginia

- arxiv: https://arxiv.org/abs/1711.11008

Online Learning with Gated Linear Networks

- intro: DeepMind

- arxiv: https://arxiv.org/abs/1712.01897

On the Information Bottleneck Theory of Deep Learning

https://openreview.net/forum?id=ry_WPG-A-&noteId=ry_WPG-A

The Unreasonable Effectiveness of Deep Features as a Perceptual Metric

- project page: https://richzhang.github.io/PerceptualSimilarity/

- arxiv: https://arxiv.org/abs/1801.03924

- github: https://github.com/richzhang/PerceptualSimilarity

Less is More: Culling the Training Set to Improve Robustness of Deep Neural Networks

- intro: University of California, Davis

- arxiv: https://arxiv.org/abs/1801.02850

Towards an Understanding of Neural Networks in Natural-Image Spaces

https://arxiv.org/abs/1801.09097

Deep Private-Feature Extraction

https://arxiv.org/abs/1802.03151

Not All Samples Are Created Equal: Deep Learning with Importance Sampling

- intro: Idiap Research Institute

- arxiv: https://arxiv.org/abs/1803.00942

Tutorials and Surveys

A Survey: Time Travel in Deep Learning Space: An Introduction to Deep Learning Models and How Deep Learning Models Evolved from the Initial Ideas

On the Origin of Deep Learning

- intro: CMU. 70 pages, 200 references

- arxiv: https://arxiv.org/abs/1702.07800

Efficient Processing of Deep Neural Networks: A Tutorial and Survey

- intro: MIT

- arxiv: https://arxiv.org/abs/1703.09039

The History Began from AlexNet: A Comprehensive Survey on Deep Learning Approaches

https://arxiv.org/abs/1803.01164

Mathematics of Deep Learning

A Mathematical Theory of Deep Convolutional Neural Networks for Feature Extraction

Mathematics of Deep Learning

- intro: Johns Hopkins University & New York University & Tel-Aviv University & University of California, Los Angeles

- arxiv: https://arxiv.org/abs/1712.04741

Local Minima

Local minima in training of deep networks

- intro: DeepMind

- arxiv: https://arxiv.org/abs/1611.06310

Deep linear neural networks with arbitrary loss: All local minima are global

- intro: CMU & University of Southern California & Facebook Artificial Intelligence Research

- arxiv: https://arxiv.org/abs/1712.00779

Gradient Descent Learns One-hidden-layer CNN: Don’t be Afraid of Spurious Local Minima

- intro: Loyola Marymount University & California State University

- arxiv: https://arxiv.org/abs/1712.01473

CNNs are Globally Optimal Given Multi-Layer Support

- intro: CMU

- arxiv: https://arxiv.org/abs/1712.02501

Spurious Local Minima are Common in Two-Layer ReLU Neural Networks

https://arxiv.org/abs/1712.08968

Dive Into CNN

Structured Receptive Fields in CNNs

How ConvNets model Non-linear Transformations

Separable Convolutions / Grouped Convolutions

Factorized Convolutional Neural Networks

Design of Efficient Convolutional Layers using Single Intra-channel Convolution, Topological Subdivisioning and Spatial “Bottleneck” Structure

STDP

A biological gradient descent for prediction through a combination of STDP and homeostatic plasticity

An objective function for STDP

Towards a Biologically Plausible Backprop

Target Propagation

How Auto-Encoders Could Provide Credit Assignment in Deep Networks via Target Propagation

Difference Target Propagation

- arxiv: http://arxiv.org/abs/1412.7525

- github: https://github.com/donghyunlee/dtp

Zero Shot Learning

Learning a Deep Embedding Model for Zero-Shot Learning

Zero-Shot (Deep) Learning

https://amundtveit.com/2016/11/18/zero-shot-deep-learning/

Zero-shot learning experiments by deep learning.

https://github.com/Elyorcv/zsl-deep-learning

Zero-Shot Learning - The Good, the Bad and the Ugly

- intro: CVPR 2017

- arxiv: https://arxiv.org/abs/1703.04394

Semantic Autoencoder for Zero-Shot Learning

- intro: CVPR 2017

- project page: https://elyorcv.github.io/projects/sae

- arxiv: https://arxiv.org/abs/1704.08345

- github: https://github.com/Elyorcv/SAE

Zero-Shot Learning via Category-Specific Visual-Semantic Mapping

https://arxiv.org/abs/1711.06167

Zero-Shot Learning via Class-Conditioned Deep Generative Models

- intro: AAAI 2018

- arxiv: https://arxiv.org/abs/1711.05820

Feature Generating Networks for Zero-Shot Learning

https://arxiv.org/abs/1712.00981

Zero-Shot Visual Recognition using Semantics-Preserving Adversarial Embedding Network

https://arxiv.org/abs/1712.01928

Combining Deep Universal Features, Semantic Attributes, and Hierarchical Classification for Zero-Shot Learning

- intro: extension of work published in the proceedings of the 2017 IAPR MVA Conference

- arxiv: https://arxiv.org/abs/1712.03151

Multi-Context Label Embedding

- keywords: Multi-Context Label Embedding (MCLE)

- arxiv: https://arxiv.org/abs/1805.01199

Incremental Learning

iCaRL: Incremental Classifier and Representation Learning

FearNet: Brain-Inspired Model for Incremental Learning

https://arxiv.org/abs/1711.10563

Incremental Learning in Deep Convolutional Neural Networks Using Partial Network Sharing

- intro: Purdue University

- arxiv: https://arxiv.org/abs/1712.02719

Incremental Classifier Learning with Generative Adversarial Networks

https://arxiv.org/abs/1802.00853

Ensemble Deep Learning

Convolutional Neural Fabrics

- intro: NIPS 2016

- arxiv: http://arxiv.org/abs/1606.02492

- github: https://github.com/shreyassaxena/convolutional-neural-fabrics

Stochastic Multiple Choice Learning for Training Diverse Deep Ensembles

- arxiv: https://arxiv.org/abs/1606.07839

- youtube: https://www.youtube.com/watch?v=KjUfMtZjyfg&feature=youtu.be

Snapshot Ensembles: Train 1, Get M for Free

- paper: http://openreview.net/pdf?id=BJYwwY9ll

- github(Torch): https://github.com/gaohuang/SnapshotEnsemble

- github: https://github.com/titu1994/Snapshot-Ensembles

Ensemble Deep Learning

Domain Adaptation

Adversarial Discriminative Domain Adaptation

- intro: UC Berkeley & Stanford University & Boston University

- arxiv: https://arxiv.org/abs/1702.05464

- github: https://github.com/corenel/pytorch-adda

Parameter Reference Loss for Unsupervised Domain Adaptation

https://arxiv.org/abs/1711.07170

Residual Parameter Transfer for Deep Domain Adaptation

https://arxiv.org/abs/1711.07714

Adversarial Feature Augmentation for Unsupervised Domain Adaptation

https://arxiv.org/abs/1711.08561

Image to Image Translation for Domain Adaptation

https://arxiv.org/abs/1712.00479

Incremental Adversarial Domain Adaptation

https://arxiv.org/abs/1712.07436

Deep Visual Domain Adaptation: A Survey

https://arxiv.org/abs/1802.03601

Unsupervised Domain Adaptation: A Multi-task Learning-based Method

https://arxiv.org/abs/1803.09208

Importance Weighted Adversarial Nets for Partial Domain Adaptation

https://arxiv.org/abs/1803.09210

Open Set Domain Adaptation by Backpropagation

https://arxiv.org/abs/1804.10427

Embedding

Learning Deep Embeddings with Histogram Loss

- intro: NIPS 2016

- arxiv: https://arxiv.org/abs/1611.00822

Full-Network Embedding in a Multimodal Embedding Pipeline

https://arxiv.org/abs/1707.09872

Clustering-driven Deep Embedding with Pairwise Constraints

https://arxiv.org/abs/1803.08457

Regression

A Comprehensive Analysis of Deep Regression

https://arxiv.org/abs/1803.08450

CapsNets

Dynamic Routing Between Capsules

- intro: Sara Sabour, Nicholas Frosst, Geoffrey E Hinton

- intro: Google Brain, Toronto

- arxiv: https://arxiv.org/abs/1710.09829

- github(official, TensorFlow): https://github.com/Sarasra/models/tree/master/research/capsules

Capsule Networks (CapsNets) – Tutorial

- youtube: https://www.youtube.com/watch?v=pPN8d0E3900

- mirror: http://www.bilibili.com/video/av16594836/

Improved Explainability of Capsule Networks: Relevance Path by Agreement

- intro: Concordia University & University of Toronto

- arxiv: https://arxiv.org/abs/1802.10204

Computer Vision

A Taxonomy of Deep Convolutional Neural Nets for Computer Vision

On the usability of deep networks for object-based image analysis

- intro: GEOBIA 2016

- arxiv: http://arxiv.org/abs/1609.06845

Learning Recursive Filters for Low-Level Vision via a Hybrid Neural Network

- intro: ECCV 2016

- project page: http://www.sifeiliu.net/linear-rnn

- paper: http://faculty.ucmerced.edu/mhyang/papers/eccv16_rnn_filter.pdf

- poster: http://www.eccv2016.org/files/posters/O-3A-03.pdf

- github: https://github.com/Liusifei/caffe-lowlevel

DSAC - Differentiable RANSAC for Camera Localization

Toward Geometric Deep SLAM

- intro: Magic Leap, Inc

- arxiv: https://arxiv.org/abs/1707.07410

All-In-One Network

HyperFace: A Deep Multi-task Learning Framework for Face Detection, Landmark Localization, Pose Estimation, and Gender Recognition

- arxiv: https://arxiv.org/abs/1603.01249

- summary: https://github.com/aleju/papers/blob/master/neural-nets/HyperFace.md

UberNet: Training a ‘Universal’ Convolutional Neural Network for Low-, Mid-, and High-Level Vision using Diverse Datasets and Limited Memory

An All-In-One Convolutional Neural Network for Face Analysis

- intro: simultaneous face detection, face alignment, pose estimation, gender recognition, smile detection, age estimation and face recognition

- arxiv: https://arxiv.org/abs/1611.00851

MultiNet: Real-time Joint Semantic Reasoning for Autonomous Driving

- intro: first place on KITTI Road Segmentation. Joint classification, detection and semantic segmentation via a unified architecture; performs all tasks in less than 100 ms

- arxiv: https://arxiv.org/abs/1612.07695

- github: https://github.com/MarvinTeichmann/MultiNet

Deep Learning for Data Structures

The Case for Learned Index Structures

- intro: MIT & Google

- keywords: B-Tree-Index, Hash-Index, BitMap-Index

- arxiv: https://arxiv.org/abs/1712.01208

Projects

Top Deep Learning Projects

deepnet: Implementation of some deep learning algorithms

DeepNeuralClassifier(Julia): Deep neural network using rectified linear units to classify handwritten digits from the MNIST dataset

Clarifai Node.js Demo

- github: https://github.com/patcat/Clarifai-Node-Demo

- blog(“How to Make Your Web App Smarter with Image Recognition”): http://www.sitepoint.com/how-to-make-your-web-app-smarter-with-image-recognition/

Deep Learning in Rust

- blog(“baby steps”): https://medium.com/@tedsta/deep-learning-in-rust-7e228107cccc#.t0pskuwkm

- blog(“a walk in the park”): https://medium.com/@tedsta/deep-learning-in-rust-a-walk-in-the-park-fed6c87165ea#.pucj1l5yx

- github: https://github.com/tedsta/deeplearn-rs

Implementation of state-of-art models in Torch

Deep Learning (Python, C, C++, Java, Scala, Go)

deepmark: THE Deep Learning Benchmarks

Siamese Net